Инкрементальные бэкапы postgresql с pgbackrest

Содержание:

- Description

- Notes

- Description

- Notes

- 24.1.3. Handling Large Databases

- Возможные ошибки

- 24.1.2. Using pg_dumpall

- Description

- 24.1.3. Handling Large Databases

- 24.1.1. Restoring the Dump

- Notes

- Description

- Description

- Description

- 22.1.1. Restoring the dump

- Восстановление дампа PostgreSQL с помощью pg_dump

- Резервное копирование баз данных PostgreSQL. Как сделать автоматический бэкап PostgreSQL с помощью Bacula? Восстановление PostgreSQL.

- Description

- Дополнительная информация

- 23.1.3. Handling large databases

- Examples

- Notes

Description

pg_dumpall is a utility for

writing out («dumping») all

PostgreSQL databases of a

cluster into one script file. The script file contains

SQL commands that can be used

as input to psql to restore the databases. It does

this by calling pg_dump for each

database in a cluster. pg_dumpall also dumps global objects that

are common to all databases. (pg_dump does not save these objects.) This

currently includes information about database users and groups,

tablespaces, and properties such as access permissions that apply

to databases as a whole.

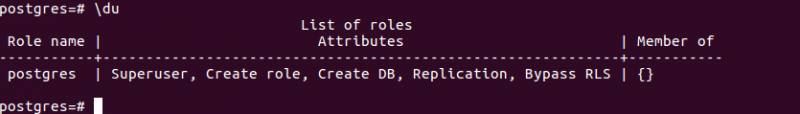

Since pg_dumpall reads tables

from all databases you will most likely have to connect as a

database superuser in order to produce a complete dump. Also you

will need superuser privileges to execute the saved script in

order to be allowed to add users and groups, and to create

databases.

The SQL script will be written to the standard output. Use the

option or shell operators to redirect it into a

file.

Notes

If your database cluster has any local additions to the database, be careful to restore the output of pg_dump into a truly empty database; otherwise you are likely to get errors due to duplicate definitions of the added objects. To make an empty database without any local additions, copy from not , for example:

CREATE DATABASE foo WITH TEMPLATE template0;

When a data-only dump is chosen and the option is used, pg_dump emits commands to disable triggers on user tables before inserting the data, and then commands to re-enable them after the data has been inserted. If the restore is stopped in the middle, the system catalogs might be left in the wrong state.

The dump file produced by pg_dump does not contain the statistics used by the optimizer to make query planning decisions. Therefore, it is wise to run after restoring from a dump file to ensure optimal performance; see and for more information.

Because pg_dump is used to transfer data to newer versions of PostgreSQL, the output of pg_dump can be expected to load into PostgreSQL server versions newer than pg_dump’s version. pg_dump can also dump from PostgreSQL servers older than its own version. (Currently, servers back to version 8.0 are supported.) However, pg_dump cannot dump from PostgreSQL servers newer than its own major version; it will refuse to even try, rather than risk making an invalid dump. Also, it is not guaranteed that pg_dump’s output can be loaded into a server of an older major version — not even if the dump was taken from a server of that version. Loading a dump file into an older server may require manual editing of the dump file to remove syntax not understood by the older server. Use of the option is recommended in cross-version cases, as it can prevent problems arising from varying reserved-word lists in different PostgreSQL versions.

Description

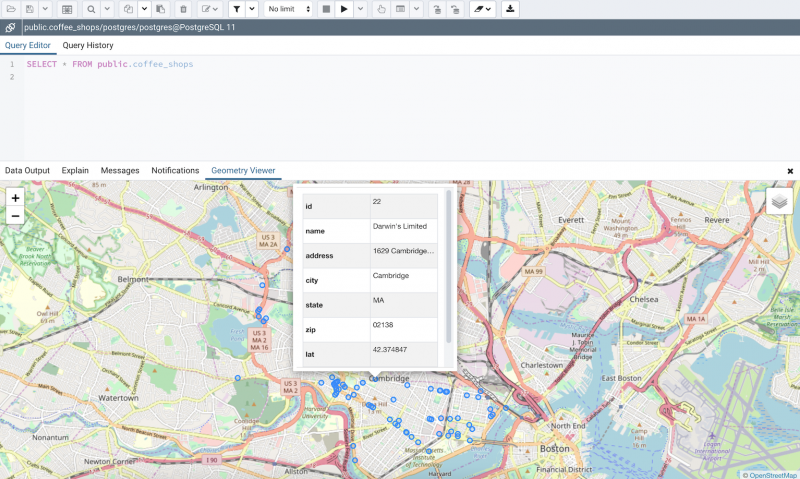

pg_dump is a utility for backing up a PostgreSQL database. It makes consistent backups even if the database is being used concurrently. pg_dump does not block other users accessing the database (readers or writers).

pg_dump only dumps a single database. To back up an entire cluster, or to back up global objects that are common to all databases in a cluster (such as roles and tablespaces), use pg_dumpall.

Dumps can be output in script or archive file formats. Script dumps are plain-text files containing the SQL commands required to reconstruct the database to the state it was in at the time it was saved. To restore from such a script, feed it to psql. Script files can be used to reconstruct the database even on other machines and other architectures; with some modifications, even on other SQL database products.

The alternative archive file formats must be used with pg_restore to rebuild the database. They allow pg_restore to be selective about what is restored, or even to reorder the items prior to being restored. The archive file formats are designed to be portable across architectures.

When used with one of the archive file formats and combined with pg_restore, pg_dump provides a flexible archival and transfer mechanism. pg_dump can be used to backup an entire database, then pg_restore can be used to examine the archive and/or select which parts of the database are to be restored. The most flexible output file formats are the “custom” format () and the “directory” format (). They allow for selection and reordering of all archived items, support parallel restoration, and are compressed by default. The “directory” format is the only format that supports parallel dumps.

Notes

If your database cluster has any local additions to the template1 database, be careful to restore the output of pg_dump into a truly empty database; otherwise you are likely to get errors due to duplicate definitions of the added objects. To make an empty database without any local additions, copy from template0 not template1, for example:

CREATE DATABASE foo WITH TEMPLATE template0;

When a data-only dump is chosen and the option --disable-triggers is used, pg_dump emits commands to disable triggers on user tables before inserting the data, and then commands to re-enable them after the data has been inserted. If the restore is stopped in the middle, the system catalogs might be left in the wrong state.

The dump file produced by pg_dump does not contain the statistics used by the optimizer to make query planning decisions. Therefore, it is wise to run ANALYZE after restoring from a dump file to ensure optimal performance; see and for more information. The dump file also does not contain any ALTER DATABASE ... SET commands; these settings are dumped by pg_dumpall, along with database users and other installation-wide settings.

24.1.3. Handling Large Databases

Some operating systems have maximum file size limits that

cause problems when creating large pg_dump output files. Fortunately,

pg_dump can write to the

standard output, so you can use standard Unix tools to work

around this potential problem. There are several possible

methods:

Use compressed dumps. You can use your favorite

compression program, for example gzip:

pg_dump dbname | gzip > filename.gz

Reload with:

gunzip -c filename.gz | psql dbname

or:

cat filename.gz | gunzip | psql dbname

Use split. The split command allows you to split the output

into smaller files that are acceptable in size to the

underlying file system. For example, to make chunks of 1

megabyte:

pg_dump dbname | split -b 1m - filename

Reload with:

cat filename* | psql dbname

Use pg_dump’s custom

dump format. If PostgreSQL was built on a system with

the zlib compression library

installed, the custom dump format will compress data as it

writes it to the output file. This will produce dump file

sizes similar to using gzip, but it

has the added advantage that tables can be restored

selectively. The following command dumps a database using the

custom dump format:

pg_dump -Fc dbname > filename

A custom-format dump is not a script for psql, but instead must be restored with

pg_restore, for example:

pg_restore -d dbname filename

See the pg_dump and pg_restore reference pages for

details.

For very large databases, you might need to combine

split with one of the other two

approaches.

Возможные ошибки

Input file appears to be a text format dump. please use psql.

Причина: дамп сделан в текстовом формате, поэтому нельзя использовать утилиту pg_restore.

Решение: восстановить данные можно командой psql <имя базы> < <файл с дампом> или выполнив SQL, открыв файл, скопировав его содержимое и вставив в SQL-редактор.

No matching tables were found

Причина: Таблица, для которой создается дамп не существует. Утилита pg_dump чувствительна к лишним пробелам, порядку ключей и регистру.

Решение: проверьте, что правильно написано название таблицы и нет лишних пробелов.

Причина: Утилита pg_dump чувствительна к лишним пробелам.

Решение: проверьте, что нет лишних пробелов.

Aborting because of server version mismatch

Причина: несовместимая версия сервера и утилиты pg_dump. Может возникнуть после обновления или при выполнении резервного копирования с удаленной консоли.

Решение: нужная версия утилиты хранится в каталоге /usr/lib/postgresql/<version>/bin/. Необходимо найти нужный каталог, если их несколько и запускать нужную версию. При отсутствии последней, установить.

No password supplied

Причина: нет системной переменной PGPASSWORD или она пустая.

Решение: либо настройте сервер для предоставление доступа без пароля в файле pg_hba.conf либо экспортируйте переменную PGPASSWORD (export PGPASSWORD или set PGPASSWORD).

24.1.2. Using pg_dumpall

pg_dump dumps only a single database at a time, and it does not dump information about roles or tablespaces (because those are cluster-wide rather than per-database). To support convenient dumping of the entire contents of a database cluster, the pg_dumpall program is provided. pg_dumpall backs up each database in a given cluster, and also preserves cluster-wide data such as role and tablespace definitions. The basic usage of this command is:

pg_dumpall > dumpfile

The resulting dump can be restored with psql:

psql -f dumpfile postgres

(Actually, you can specify any existing database name to start from, but if you are loading into an empty cluster then postgres should usually be used.) It is always necessary to have database superuser access when restoring a pg_dumpall dump, as that is required to restore the role and tablespace information. If you use tablespaces, make sure that the tablespace paths in the dump are appropriate for the new installation.

pg_dumpall works by emitting commands to re-create roles, tablespaces, and empty databases, then invoking pg_dump for each database. This means that while each database will be internally consistent, the snapshots of different databases are not sychronized.

Description

pg_dump is a utility for backing up a PostgreSQL database. It makes consistent backups even if the database is being used concurrently. pg_dump does not block other users accessing the database (readers or writers).

pg_dump only dumps a single database. To backup global objects that are common to all databases in a cluster, such as roles and tablespaces, use pg_dumpall.

Dumps can be output in script or archive file formats. Script dumps are plain-text files containing the SQL commands required to reconstruct the database to the state it was in at the time it was saved. To restore from such a script, feed it to psql. Script files can be used to reconstruct the database even on other machines and other architectures; with some modifications, even on other SQL database products.

The alternative archive file formats must be used with pg_restore to rebuild the database. They allow pg_restore to be selective about what is restored, or even to reorder the items prior to being restored. The archive file formats are designed to be portable across architectures.

When used with one of the archive file formats and combined with pg_restore, pg_dump provides a flexible archival and transfer mechanism. pg_dump can be used to backup an entire database, then pg_restore can be used to examine the archive and/or select which parts of the database are to be restored. The most flexible output file formats are the «custom» format (-Fc) and the «directory» format (-Fd). They allow for selection and reordering of all archived items, support parallel restoration, and are compressed by default. The «directory» format is the only format that supports parallel dumps.

24.1.3. Handling Large Databases

Some operating systems have maximum file size limits that cause problems when creating large pg_dump output files. Fortunately, pg_dump can write to the standard output, so you can use standard Unix tools to work around this potential problem. There are several possible methods:

Use compressed dumps. You can use your favorite compression program, for example gzip:

pg_dump dbname | gzip > filename.gz

Reload with:

gunzip -c filename.gz | psql dbname

or:

cat filename.gz | gunzip | psql dbname

Use split. The split command allows you to split the output into smaller files that are acceptable in size to the underlying file system. For example, to make chunks of 1 megabyte:

pg_dump dbname | split -b 1m - filename

Reload with:

cat filename* | psql dbname

Use pg_dump’s custom dump format. If PostgreSQL was built on a system with the zlib compression library installed, the custom dump format will compress data as it writes it to the output file. This will produce dump file sizes similar to using gzip, but it has the added advantage that tables can be restored selectively. The following command dumps a database using the custom dump format:

pg_dump -Fc dbname > filename

A custom-format dump is not a script for psql, but instead must be restored with pg_restore, for example:

pg_restore -d dbname filename

See the pg_dump and pg_restore reference pages for details.

For very large databases, you might need to combine split with one of the other two approaches.

24.1.1. Restoring the Dump

The text files created by pg_dump are intended to be read in by the

psql program. The general

command form to restore a dump is

psql dbname < infile

where infile is the file

output by the pg_dump command.

The database dbname will not be

created by this command, so you must create it yourself from

template0 before executing

psql (e.g., with createdb -T template0 dbname). psql supports options similar to

pg_dump for specifying the

database server to connect to and the user name to use. See the

psql reference page for more

information.

Before restoring an SQL dump, all the users who own objects

or were granted permissions on objects in the dumped database

must already exist. If they do not, the restore will fail to

recreate the objects with the original ownership and/or

permissions. (Sometimes this is what you want, but usually it

is not.)

By default, the psql script

will continue to execute after an SQL error is encountered. You

might wish to run psql with

the ON_ERROR_STOP variable set to

alter that behavior and have psql exit with an exit status of 3 if an

SQL error occurs:

psql --set ON_ERROR_STOP=on dbname < infile

Either way, you will only have a partially restored

database. Alternatively, you can specify that the whole dump

should be restored as a single transaction, so the restore is

either fully completed or fully rolled back. This mode can be

specified by passing the -1 or

--single-transaction command-line

options to psql. When using

this mode, be aware that even a minor error can rollback a

restore that has already run for many hours. However, that

might still be preferable to manually cleaning up a complex

database after a partially restored dump.

The ability of pg_dump and

psql to write to or read from

pipes makes it possible to dump a database directly from one

server to another, for example:

pg_dump -h host1 dbname | psql -h host2 dbname

Notes

If your database cluster has any local additions to the template1 database, be careful to restore the output of pg_dump into a truly empty database; otherwise you are likely to get errors due to duplicate definitions of the added objects. To make an empty database without any local additions, copy from template0 not template1, for example:

CREATE DATABASE foo WITH TEMPLATE template0;

When a data-only dump is chosen and the option --disable-triggers is used, pg_dump emits commands to disable triggers on user tables before inserting the data, and then commands to re-enable them after the data has been inserted. If the restore is stopped in the middle, the system catalogs might be left in the wrong state.

The dump file produced by pg_dump does not contain the statistics used by the optimizer to make query planning decisions. Therefore, it is wise to run ANALYZE after restoring from a dump file to ensure optimal performance; see and for more information. The dump file also does not contain any ALTER DATABASE ... SET commands; these settings are dumped by pg_dumpall, along with database users and other installation-wide settings.

Description

pg_dump is a utility for

backing up a PostgreSQL

database. It makes consistent backups even if the database is

being used concurrently. pg_dump

does not block other users accessing the database (readers or

writers).

Dumps can be output in script or archive file formats. Script

dumps are plain-text files containing the SQL commands required

to reconstruct the database to the state it was in at the time it

was saved. To restore from such a script, feed it to psql. Script

files can be used to reconstruct the database even on other

machines and other architectures; with some modifications, even

on other SQL database products.

The alternative archive file formats must be used with

pg_restore to rebuild the

database. They allow pg_restore

to be selective about what is restored, or even to reorder the

items prior to being restored. The archive file formats are

designed to be portable across architectures.

When used with one of the archive file formats and combined

with pg_restore, pg_dump provides a flexible archival and

transfer mechanism. pg_dump can

be used to backup an entire database, then pg_restore can be used to examine the

archive and/or select which parts of the database are to be

restored. The most flexible output file format is the

«custom» format (-Fc). It allows for selection and reordering of all

archived items, and is compressed by default.

Description

pg_dump is a utility for backing up a PostgreSQL database. It makes consistent backups even if the database is being used concurrently. pg_dump does not block other users accessing the database (readers or writers).

Dumps can be output in script or archive file formats. Script dumps are plain-text files containing the SQL commands required to reconstruct the database to the state it was in at the time it was saved. To restore from such a script, feed it to psql. Script files can be used to reconstruct the database even on other machines and other architectures; with some modifications, even on other SQL database products.

The alternative archive file formats must be used with pg_restore to rebuild the database. They allow pg_restore to be selective about what is restored, or even to reorder the items prior to being restored. The archive file formats are designed to be portable across architectures.

When used with one of the archive file formats and combined with pg_restore, pg_dump provides a flexible archival and transfer mechanism. pg_dump can be used to backup an entire database, then pg_restore can be used to examine the archive and/or select which parts of the database are to be restored. The most flexible output file formats are the «custom» format (-Fc) and the «directory» format (-Fd). They allow for selection and reordering of all archived items, support parallel restoration, and are compressed by default. The «directory» format is the only format that supports parallel dumps.

Description

pg_dump is a utility for

backing up a PostgreSQL

database. It makes consistent backups even if the database is

being used concurrently. pg_dump

does not block other users accessing the database (readers or

writers).

Dumps can be output in script or archive file formats. Script

dumps are plain-text files containing the SQL commands required

to reconstruct the database to the state it was in at the time it

was saved. To restore from such a script, feed it to psql. Script

files can be used to reconstruct the database even on other

machines and other architectures; with some modifications, even

on other SQL database products.

The alternative archive file formats must be used with

pg_restore to rebuild the

database. They allow pg_restore

to be selective about what is restored, or even to reorder the

items prior to being restored. The archive file formats are

designed to be portable across architectures.

When used with one of the archive file formats and combined

with pg_restore, pg_dump provides a flexible archival and

transfer mechanism. pg_dump can

be used to backup an entire database, then pg_restore can be used to examine the

archive and/or select which parts of the database are to be

restored. The most flexible output file format is the

«custom» format (-Fc). It allows for selection and reordering of all

archived items, and is compressed by default.

22.1.1. Restoring the dump

The text files created by pg_dump are intended to be read in by

the psql program. The

general command form to restore a dump is

psql dbname < infile

where infile is what you

used as outfile for the

pg_dump command. The

database dbname will not be

created by this command, you must create it yourself from

template0 before executing

psql (e.g., with createdb -T template0 dbname). psql supports options similar to

pg_dump for controlling the

database server location and the user name. See psql’s

reference page for more information.

Not only must the target database already exist before

starting to run the restore, but so must all the users who

own objects in the dumped database or were granted

permissions on the objects. If they do not, then the restore

will fail to recreate the objects with the original ownership

and/or permissions. (Sometimes this is what you want, but

usually it is not.)

Once restored, it is wise to run ANALYZE on each database so the

optimizer has useful statistics. An easy way to do this is to

run vacuumdb -a -z to VACUUM ANALYZE all databases; this is

equivalent to running VACUUM ANALYZE

manually.

The ability of pg_dump

and psql to write to or read

from pipes makes it possible to dump a database directly from

one server to another; for example:

pg_dump -h host1 dbname | psql -h host2 dbname

Восстановление дампа PostgreSQL с помощью pg_dump

Чтобы восстановить дамп данных, созданный при помощи pg_dump, можно перенаправить этот файл в стандартный ввод psql:

Примечание: эта операция перенаправления не создает требуемую базу данных. Ее нужно создать отдельно до запуска команды.

Для примера можно создать новую БД по имени restored_database, а затем перенаправить дамп по имени database.bak; для этого нужно выполнить:

При этом будет создана пустая БД на основе template0.

Для корректного восстановления дампа можно выполнить еще одно действие — воссоздать любого пользователя, который владеет или имеет привилегии на объекты в базе данных.

К примеру, если БД принадлежит пользователю test_user, то перед импортированием нужно создать его в восстанавливаемой системе:

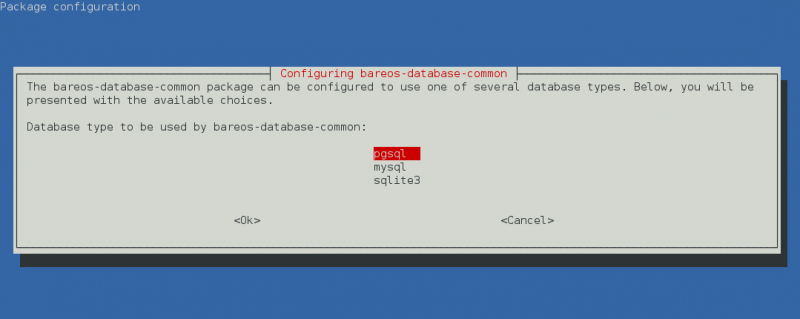

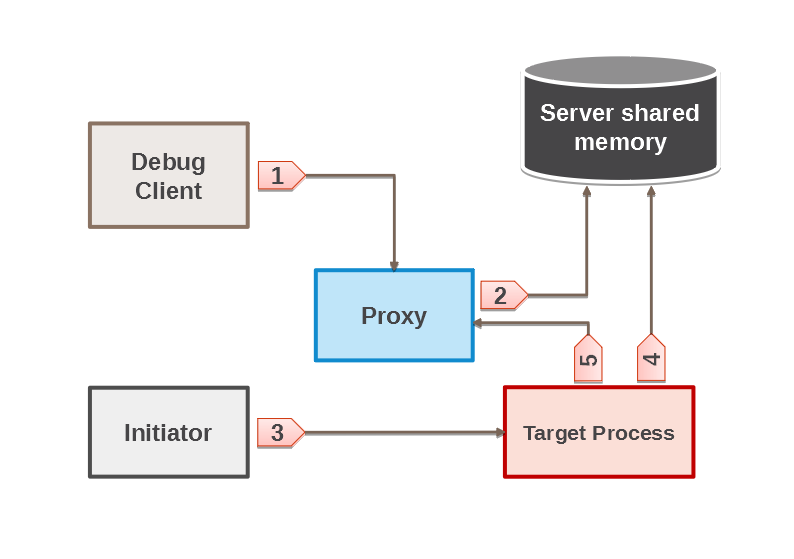

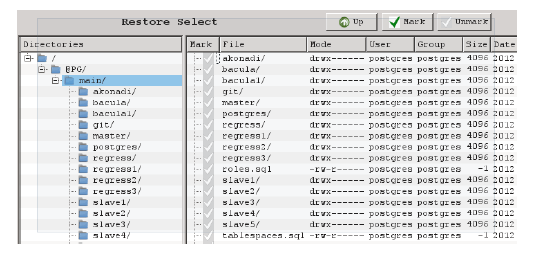

Резервное копирование баз данных PostgreSQL. Как сделать автоматический бэкап PostgreSQL с помощью Bacula? Восстановление PostgreSQL.

Резервное копирование PostgreSQL с помощью утилиты Bacula существенно упрощает и ускоряет процесс создания бэкапа базы PostgreSQL. При этом администратору не нужно обладать знаниями внутренних компонентов PostgreSQL для резервного копирования баз PostgreSQL и их восстановления и необязательно владеть навыками написания сложных скриптов резервного копирования PostgreSQL.

Бэкап PostgreSQL с помощью Bacula имеет широкую применимость. Bacula Enterprise Edition позволяет производить бэкап PostgreSQL на Windows, поскольку имеет соответствующий агент. С помощью данной утилиты также возможно резервное копирование базы 1С на PostgreSQL.

Утилита для создания бэкапов PostgreSQL автоматически создает резервную копию всей важной информации, например, конфигураций, определений пользователей, таблиц. Утилита для создания бэкапов базы PostgreSQL поддерживает технологию резервного копирования и восстановления PostgreSQL из дампа, а также технологию восстановления базы PostgreSQL из бэкапа до заданной контрольной точки

Ключевые преимущества резервного копирования и восстановления PostgreSQL с Bacula:

Возможность создания бэкапов и восстановления базы PostgreSQL с помощью дампов, а также поддержка технологии восстановления PostgreSQL до заданной контрольной точки (PITR)

В режиме восстановления PostegreSQL до заданной контрольной точки (PITR), утилита поддерживает создание как инкрементальных, так и дифференциальных бэкапов PostgreSQL

Утилита для бэкапа PostgreSQL автоматически запускает процедуру резервного копирования важной информации, такой как информация о конфигурации БД, определения пользователей, или табличные пространства

Резервное копирование PostgreSQL работает с базами 1С

Как сделать бэкап PostgreSQL: PITR или Dump?

| Custom | Dump | PITR | |

|---|---|---|---|

| Возможность восстанавливать отдельные объекты PostgreSQL (таблица, схема ) | Да | Нет | Нет |

| Скорость резервного копирования PostgreSQL | Медленно | Медленно | Быстро |

| Скорость восстановления PostgreSQL | Медленно | Очень Медленно | Быстро |

| Размер бэкапа PostgreSQL | Маленькая | Маленькая | Большая |

| Возможность восстановления PostgreSQL в любой точке времени | Нет | Нет | Да |

| Инкриментальные/Дифференциальные резервные копии PostgreSQL | Нет | Нет | Да |

| Резервное копирование PostgreSQL без остановки базы данных | Да | Да | Да |

| Консистентность бэкапа PostgreSQL | Да | Да | Да |

| Возможность восстановления на предыдущую версию PostgreSQL | Нет | Да | Да |

| Возможность восстановления на более новую версию PostgreSQL | Да | Да | Нет |

Чаще всего режимы резервного копирования баз PostgreSQL через Dump и PITR комбинируют.

Уровни резервного копирования PostgreSQL в PITR

Когда при бэкапе базы данных PostgreSQL используется режим PITR, при разных уровнях копирования (инкрементальный, дифференциальный, полный) будут выполнены следующие действия:

- При полном резервном копировании базы PostgreSQL плагин сделает полную резервную копию директории data и всех WAL файлов, которые есть на данный момент;

- При инкрементальном резервном копировании PostgreSQL будет переведен на новый WAL файл и будут сделаны резервные копии всех WAL файлов, которые были созданы с момента последнего резервного копирования баз данных PostgreSQL

- При дифференциальном бэкапе PostgreSQL будет сделана резервная копия всех WAL файлов и файлов data с момента последнего бэкапа PostgreSQL

Резервное копирование PostgreSQL в режиме Dump

Плагин для резервного копирования баз PostgreSQL генерирует следующие файлы в резервной копии для одной базы “test”:

| Файл | Контекст | Коммент |

|---|---|---|

| roles.sql | Глобальный | Список всех пользователей, их паролей и остальных опция |

| postgresql.conf | Глобальный | PostgreSQL конфигурация кластера |

| pg_hba.conf | Глобальный | Конфигурация подключений клиентов |

| pg_ident.conf | Глобальный | Конфигурация подключений клиентов |

| tablespaces.sql | Глобальный | Конфигураця TableSpace |

| createdb.sql | База данных | Скрипт создания базы данных |

| schema.sql | База данных | Схема Базы данных |

| data.sqlc | База данных | Дамп базы данных в формате “custom”(содержит все, что необходимо для восстановления) |

| data.sql | База данных | Резервная копия база данных в формате dump |

Автоматическое резервное копирование баз PostgreSQL доступно на всех 32/64-разрядных платформах Linux:

- CentOS5/6/7

- Redhat 5/6/7

- Ubuntu LTS

- Debian Squeeze

- Suse 11

Резервное копирование PostgreSQL запускается вместе с ПО Bacula Enterprise версии 6.0.6 или выше и поддерживает резервное копирование БД PostgreSQL версий 8.x, 9.0.x и 9.1.x..

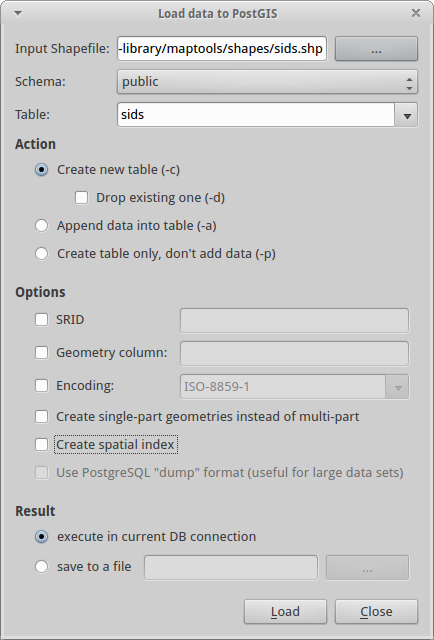

Description

pg_dumpall is a utility for writing out («dumping») all PostgreSQL databases of a cluster into one script file. The script file contains SQL commands that can be used as input to psql to restore the databases. It does this by calling pg_dump for each database in a cluster. pg_dumpall also dumps global objects that are common to all databases. (pg_dump does not save these objects.) This currently includes information about database users and groups, tablespaces, and properties such as access permissions that apply to databases as a whole.

Since pg_dumpall reads tables from all databases you will most likely have to connect as a database superuser in order to produce a complete dump. Also you will need superuser privileges to execute the saved script in order to be allowed to add users and groups, and to create databases.

The SQL script will be written to the standard output. Use the -f/--file option or shell operators to redirect it into a file.

Дополнительная информация

-

pg_dump

- Резервное копирование и восстановление в PostgreSQLПредположим что у нас есть postgresql в режиме потоковой репликации. master-сервер и hot-standby готовый заменить погибшего товарища. При плохом развитии событий, нам остается только создать trigger-файл и переключить наши приложения на работу с новым мастером. Однако, возможны ситуации когда вполне законные изменения были сделаны криво написанной миграцией и попали как на мастер, так и на подчиненный сервер. Например, были удалены/изменены данные в части таблиц или же таблицы были вовсе удалены. С точки зрения базы данных все нормально, а с точки зрения бизнеса — катастрофа. В таком случае провозглашение горячего hot-standby в мастера, процедура явно бесполезная… Для предостережения такой ситуации есть, как минимум, два варианта… использовать периодическое резервное копирование средствами pg_dump;использовать резервное копирование на основе базовых копий и архивов WAL.

- Резервное копирование баз данных в СУБД PostgreSQL (On-line backup)

23.1.3. Handling large databases

Since PostgreSQL allows

tables larger than the maximum file size on your system, it

can be problematic to dump such a table to a file, since the

resulting file will likely be larger than the maximum size

allowed by your system. Since pg_dump can write to the standard

output, you can just use standard Unix tools to work around

this possible problem.

Use compressed dumps. You can use your favorite

compression program, for example gzip.

pg_dump dbname | gzip > filename.gz

Reload with

createdb dbname gunzip -c filename.gz | psql dbname

or

cat filename.gz | gunzip | psql dbname

Use split. The

split command allows you to split

the output into pieces that are acceptable in size to the

underlying file system. For example, to make chunks of 1

megabyte:

pg_dump dbname | split -b 1m - filename

Reload with

createdb dbname cat filename* | psql dbname

Use the custom dump format. If PostgreSQL was built on a system with

the zlib compression

library installed, the custom dump format will compress

data as it writes it to the output file. This will produce

dump file sizes similar to using gzip, but it has the added advantage that

tables can be restored selectively. The following command

dumps a database using the custom dump format:

pg_dump -Fc dbname > filename

A custom-format dump is not a script for psql, but instead must be restored

with pg_restore. See the

pg_dump and pg_restore reference pages for

details.

Examples

To dump a database called mydb into a SQL-script file:

$ pg_dump mydb > db.sql

To reload such a script into a (freshly created) database named newdb:

$ psql -d newdb -f db.sql

To dump a database into a custom-format archive file:

$ pg_dump -Fc mydb > db.dump

To dump a database into a directory-format archive:

$ pg_dump -Fd mydb -f dumpdir

To dump a database into a directory-format archive in parallel with 5 worker jobs:

$ pg_dump -Fd mydb -j 5 -f dumpdir

To reload an archive file into a (freshly created) database named newdb:

$ pg_restore -d newdb db.dump

To dump a single table named mytab:

$ pg_dump -t mytab mydb > db.sql

To dump all tables whose names start with emp in the detroit schema, except for the table named employee_log:

$ pg_dump -t 'detroit.emp*' -T detroit.employee_log mydb > db.sql

To dump all schemas whose names start with east or west and end in gsm, excluding any schemas whose names contain the word test:

$ pg_dump -n 'east*gsm' -n 'west*gsm' -N '*test*' mydb > db.sql

The same, using regular expression notation to consolidate the switches:

$ pg_dump -n '(east|west)*gsm' -N '*test*' mydb > db.sql

To dump all database objects except for tables whose names begin with ts_:

$ pg_dump -T 'ts_*' mydb > db.sql

To specify an upper-case or mixed-case name in -t and related switches, you need to double-quote the name; else it will be folded to lower case (see ). But double quotes are special to the shell, so in turn they must be quoted. Thus, to dump a single table with a mixed-case name, you need something like

Notes

If your database cluster has any local additions to the

template1 database, be careful to restore

the output of pg_dump into a truly

empty database; otherwise you are likely to get errors due to

duplicate definitions of the added objects. To make an empty

database without any local additions, copy from template0 not template1,

for example:

CREATE DATABASE foo WITH TEMPLATE template0;

When a data-only dump is chosen and the option --disable-triggers is used, pg_dump emits commands to disable triggers on

user tables before inserting the data, and then commands to

re-enable them after the data has been inserted. If the restore is

stopped in the middle, the system catalogs might be left in the

wrong state.

The dump file produced by pg_dump does not contain the statistics used

by the optimizer to make query planning decisions. Therefore, it is

wise to run ANALYZE after restoring from a

dump file to ensure optimal performance; see

and

for more information. The dump file also does not contain any

ALTER DATABASE ... SET commands; these

settings are dumped by pg_dumpall, along with database users and

other installation-wide settings.