Продолжаем ускорять блог на wordpress

Содержание:

- Maxconn

- Заметаем следы

- net.ipv4.tcp_rmem

- Кто-то наследил…

- net.ipv4.tcp_wmem

- Как перевести системные часы в UTC или localtime и наоборот?¶

- Analysis, Design and Optimization of Rugate Filters

- Questions and Comments from Users

- Fabrication of Rugate Filters

- Reverse Path Filter(rp_filter) settings in Red hat 5/Centos 5 mahcines

- Optical Properties of Rugate Filters

- Луковая маршрутизация

- INFO

- TCP port range

- What is reverse path filtering?

- Процесс ksoftirqd съедает все ресурсы системы. Что делать?¶

- Угроза извне

- Configuration tips that enable serving a higher number of concurrent users

- Number of open files

Maxconn

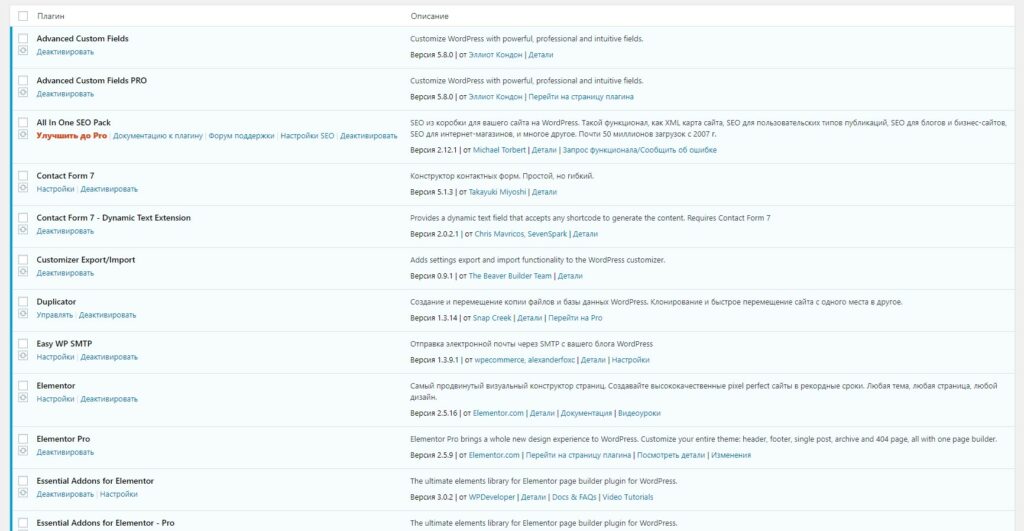

The default number of maximum connections is provided during HAProxy’s compilation. The package available on my Ubuntu 16.04 was compiled with 2000 maximum connections. This is quite low, but can be changed in the configuration. First thing you need to know, however, is that there are actually three maxconn values in the config. A sample config might look like this:

global maxconn 100000 (...)frontend frontend1 bind *:80 mode http maxconn 100000 default_backend backend1 (...)backend backend1 server frontend-1 192.168.1.1:80 maxconn=200 server frontend-2 192.168.1.2:80 maxconn=200 balance roundrobin (...)

There’s a very good answer on Stack Overflow illustrating how these values work, which I recommend reading. The short version is that both the global and the frontend maxconn values are by default equal to 2000, so you have to configure both, and they are configured per-process. So with configured to 4 and global set to 100k your HAProxy server will accept 400k connections. The remainder won’t be rejected, but instead queued in the kernel.

There’s also a per-server maxconn, which by default is not limited. This configuration option allows you to have a smaller number of active connections to each backend server. The rest gets queued in HAProxy internal queues. With a smaller number of connections reaching the servers, every request will get processed more quickly, so even though requests get queued on HAProxy their overall journey duration might actually be shorter. See this article to find some practical examples.

Заметаем следы

ОK, пароли в надежном месте, личные файлы тоже, что теперь? А теперь мы должны позаботиться о том, чтобы какие-то куски наших личных данных не попали в чужие руки. Ни для кого не секрет, что при удалении файла его актуальное содержимое остается на носителе даже в том случае, если после этого произвести форматирование. Наши зашифрованные данные будут в сохранности даже после стирания, но как быть с флешками и прочими картами памяти? Здесь нам пригодится утилита srm, которая не просто удаляет файл, но и заполняет оставшиеся после него блоки данных мусором:

Как всегда, все просто до безобразия. Далее, если речь идет о всем носителе, то можно воспользоваться старым добрым dd:

Эта команда сотрет все данные на флешке sdb. Далее останется создать таблицу разделов (с одним разделом) и отформатировать в нужную ФС. Использовать для этого рекомендуется fdisk и mkfs.vfat, но можно обойтись и графическим gparted.

Предотвращение BruteForce-атак

В Ubuntu/Debian для установки набираем:

Конфиги находятся в каталоге /etc/fail2ban. После изменения конфигурации следует перезапускать fail2ban командой:

net.ipv4.tcp_rmem

source man tcp

tcp_rmem (since Linux 2.4) This is a vector of 3 integers: .

These parameters are used by TCP to regulate receive buffer sizes. TCP dynamically adjusts the size of the receive buffer from the defaults listed below, in the range of these values, depending on memory available in the system.

min Minimum size of the receive buffer used by each TCP socket. The default value is the system page size. On Linux 2.4, the default value is 4K, lowered to PAGE_SIZE bytes in low-memory systems.

This value is used to ensure that in memory pressure mode, allocations below this size will still succeed. This is not used to bound the size of the receive buffer declared using SO_RCVBUF on a socket.

default The default size of the receive buffer for a TCP socket. The default value is 87380 bytes. (On Linux 2.4, this will be lowered to 43689 in low-memory systems.)

This value overwrites the initial default buffer size from the generic global net.core.rmem_default defined for all protocols.

If larger receive buffer sizes are desired, this value should be increased (to affect all sockets). To employ large TCP windows, the net.ipv4.tcp_window_scaling must be enabled (default).

max The maximum size of the receive buffer used by each TCP socket. The default value is calculated using the formula: max(87380, min(4MB, tcp_mem*PAGE_SIZE/128)). (On Linux 2.4, the default is 87380*2 bytes, lowered to 87380 in low-memory systems).

This value does not override the global net.core.rmem_max. This is not used to limit the size of the receive buffer declared using SO_RCVBUF on a socket.

To modify tcp_rmem min, default, and max values, edit sysctl.conf and use the following format

net.ipv4.tcp_rmem = 4096 12582912 16777216

Кто-то наследил…

Кто-то особенно умный смог обойти наш брандмауэр, пройти мимо Snort, получить права root в системе и теперь ходит в систему регулярно, используя установленный бэкдор. Нехорошо, бэкдор надо найти, удалить, а систему обновить. Для поиска руткитов и бэкдоров используем rkhunter:

Запускаем:

Софтина проверит всю систему на наличие руткитов и выведет на экран результаты. Если зловред все-таки найдется, rkhunter укажет на место и его можно будет затереть. Более детальный лог располагается здесь: /var/log/rkhunter.log. Запускать rkhunter лучше в качестве cron-задания ежедневно:

Базу rkhunter рекомендуется время от времени обновлять с помощью такой команды:

Ее, кстати, можно добавить перед командой проверки в cron-сценарий. Еще два инструмента поиска руткитов:

По сути, те же яйца Фаберже с высоты птичьего полета, но базы у них различные. Возможно, с их помощью удастся выявить то, что пропустил rkhunter. Ну и на закуску debsums — инструмент для сверки контрольных сумм файлов, установленных пакетов с эталоном. Ставим:

Запускаем проверку:

Как всегда? запуск можно добавить в задания cron.

rkhunter за работой

В моей системе руткитов нет

net.ipv4.tcp_wmem

tcp_wmem (since Linux 2.4) This is a vector of 3 integers: .

These parameters are used by TCP to regulate send buffer sizes. TCP dynamically adjusts the size of the send buffer from the default values listed below, in the range of these values, depending on memory available.

min Minimum size of the send buffer used by each TCP socket. The default value is the system page size. (On Linux 2.4, the default value is 4K bytes.)

This value is used to ensure that in memory pressure mode, allocations below this size will still succeed. This is not used to bound the size of the send buffer declared using SO_SNDBUF on a socket.

default The default size of the send buffer for a TCP socket. This value overwrites the initial default buffer size from the generic global net.core.wmem_default defined for all protocols.

The default value is 16K bytes.If larger send buffer sizes are desired, this value should be increased (to affect all sockets).

To employ large TCP windows, the /proc/sys/net/ipv4/tcp_window_scaling must be set to a non-zero value (default).

max The maximum size of the send buffer used by each TCP socket. This value does not override the value in /proc/sys/net/core/wmem_max. This is not used to limit the size of the send buffer declared using SO_SNDBUF on a socket.

The default value is calculated using the formula: max(65536, min(4MB, tcp_mem*PAGE_SIZE/128))

On Linux 2.4, the default value is 128K bytes, lowered 64K depending on low-memory systems.)

To modify tcp_wmem min, default, and max values, edit sysctl.conf and use the following format

net.ipv4.tcp_wmem = 4096 12582912 16777216

Как перевести системные часы в UTC или localtime и наоборот?¶

Localtime – это хранение в UEFI BIOS компьютера времени с учётом установленного в системе часового пояса. При определённых условиях это может вызывать проблемы с синхронизацией времени, а также работой нескольких операционных систем на одном компьютере.

UTC – это хранение в UEFI BIOS компьютера всемирного координированного времени по Гринвичу без учёта часовых поясов. Часовыми поясами управляет операционная система, что позволяет каждому пользователю в системе, а также приложениям использовать .

Переключение аппаратных часов компьютера в UTC из localtime:

sudo timedatectl set-local-rtc no

Переключение аппаратных часов компьютера в localtime из UTC:

Analysis, Design and Optimization of Rugate Filters

For a theoretical analysis, a gradient-index coating structure can be approximated by a step-index structure with a larger number of steps, such that the index change from one “layer” to the next one becomes very small.

Therefore, conventional software developed for step-index structures can in principle be used.

However, one has to deal with a large number of “layers” and requires an efficient method to specify the structure, as it is not convenient to enter hundreds or thousands of externally computed refractive index values into such software.

One will usually desire to have the whole structure automatically computed from some set of parameters such as a medium refractive index, an oscillation amplitude, and parameters for apodization.

Also, it is often necessary to combine the rugate structure with additional parts such as anti-reflection coatings.

Simple filter curves can be obtained with analytical designs.

For more complex designs, one can use the inverse Fourier transform method, where one essentially exploits the fact that at least for low reflectivities, the reflection spectrum is related to the Fourier transform of the spatial index profile.

The method can be modified to work also with high reflectivities .

There are also other techniques for the numerical optimization of rugate filters.

Here, one will usually not optimize layer thickness values, as is common for step-index structures, but rather the details of the refractive index profile.

The most convenient approach is usually obtained when the structure can be parametrized as explained above.

One then varies these parameters such as to minimize some kind of merit function, which “punishes” deviations from the desired optical properties.

Questions and Comments from Users

Here you can submit questions and comments. As far as they get accepted by the author, they will appear above this paragraph together with the author’s answer. The author will decide on acceptance based on certain criteria. Essentially, the issue must be of sufficiently broad interest.

By submitting the information, you give your consent to the potential publication of your inputs on our website according to our rules. (If you later retract your consent, we will delete those inputs.) As your inputs are first reviewed by the author, they may be published with some delay.

Bibliography

| J. A. Dobrowolski and D. Lowe, “Optical thin film synthesis program based on the use of Fourier transforms”, Appl. Opt. 17 (19), 3039 (1978), doi:10.1364/AO.17.003039 | |

| P. G. Verly et al., “Synthesis of high rejection filters with the Fourier transform method”, Appl. Opt. 28 (14), 2864 (1989), doi:10.1364/AO.28.002864 | |

| W. J. Gunning et al., “Codeposition of continuous composition rugate filters”, Appl. Opt. 28 (14), 2945 (1989), doi:10.1364/AO.28.002945 | |

| B. G. Bovard, “Rugate filter design: the modified Fourier transform technique”, Appl. Opt. 29 (1), 24 (1990), doi:10.1364/AO.29.000024 | |

| H. Fabricius, “Gradient-index filters: designing filters with steep skirts, high reflection, and quintic matching layers”, Appl. Opt. 31 (25), 5191 (1992), doi:10.1364/AO.31.005191 | |

| J. R. Jacobsson, “Review of the optical properties of inhomogeneous thin films”, Proc. SPIE 2046, 2 (1993) | |

| B. A. Tirri et al., “Gradient index film fabrication using optical control techniques”, Proc. SPIE 2046, 224 (1993) | |

| J.-S. Chen et al., “Mixed films of TiO2-SiO2 deposited by double electron-beam coevaporation”, Appl. Opt. 35 (1), 90 (1996), doi:10.1364/AO.35.000090 | |

| J.-G. Yoon et al, “Structural and optical properties of TiO2-SiO2 composite films prepared by aerosol-assisted chemical-vapor deposition”, J. Korean Phys. Soc. 33 (6), 699 (1998) | |

| K. Kaminska et al., “Simulating structure and optical response of vacuum evaporated porous rugate filters”, J. Appl. Phys. 95 (6), 3055 (2004), doi:10.1063/1.1649804 | |

| M. Jerman et al., “Refractive index of thin films of SiO2, ZrO2, and HfO2 as a function of the films’ mass density”, Appl. Opt. 44 (15), 3006 (2005), doi:10.1364/AO.44.003006 | |

| M. Jupé et al., “Laser-induced damage in gradual index layers and Rugate filters”, Proc. SPIE 6403, 640311 (2006), doi:10.1117/12.696130 | |

| A. V. Tikhonravov et al., “New optimization algorithm for the synthesis of rugate optical coatings”, Appl. Opt. 45 (7), 1515 (2006), doi:10.1364/AO.45.001515 | |

| A. Thelen, Design of Optical Interference Coatings, McGraw–Hill (1989) | |

| Development of a rugate filter with the RP Coating software |

See also: optical filters, dielectric coatings, Bragg mirrors, fiber Bragg gratingsand other articles in the categories ,

Fabrication of Rugate Filters

There are different techniques for obtaining a continuous variation of the refractive index in a dielectric coating:

- The probably most common approach is to fabricate mixtures of two different coating materials with a variable mixing ratio.

For that purpose, one may use double electron beam coevaporation or similar methods (also using resistance heating or ion beam sputtering) with material pairs such as ZrO2 / MgO, ZrO2 / SiO2, Ta2O5 / TiO2 or TiO2 / SiO2.

Depending on the detailed growth conditions (composition, substrate temperature, etc.), polycrystalline or amorphous structures can result. - For some coating materials such as TiO2, the packing density can be varied during vapor deposition, e.g. by control of the oxygen partial pressure or by glancing angle deposition.

The packing density directly affects the refractive index .

Such techniques are also applied to porous silicon rugate filters .

A challenge arises from the fact that a precise refractive index control is more difficult to obtain for gradient-index structures.

For high precision, automatic computer control is required, based on online growth monitoring and a sophisticated algorithm.

When deviations from the target values are detected during growth, the rest of the structure is automatically adapted such as to compensate the errors as far as possible.

Reverse Path Filter(rp_filter) settings in Red hat 5/Centos 5 mahcines

In Linux machine’s Reverse Path filtering is handled by sysctl, like many other kernel settings.

The current value on your machine can be found from the following method.

# cat /proc/sys/net/ipv4/conf/default/rp_filter 1 #

Let’s understand the boolean values for rp_filter first then go ahead with configuration.

1 indicates, that the kernel will do source validation by confirming reverse path.

indicates, no source validation.

The previously shown output of /proc/sys/net/ipv4/conf/default/rp_filter indicates the default value of Reverse path filtering for any new interface.

You can also enable reverse path filtering only on your desired interface, because each interface has got different rp_filter files.

# cd /proc/sys/net/ipv4/conf/ # ll total 0 dr-xr-xr-x 2 root root 0 Feb 28 05:12 all dr-xr-xr-x 2 root root 0 Feb 28 05:12 default dr-xr-xr-x 2 root root 0 Feb 28 05:12 eth0 dr-xr-xr-x 2 root root 0 Feb 28 05:12 lo

All the folder’s in the above shown output has the file rp_filter. I will recommend enabling it by modifying the file /proc/sys/net/ipv4/conf/all/rp_filter file, if you Reverse filtering very strictly. This can be done by simply redirecting your desired boolean value(1 or 0) to the desired file.

# echo 1 > /proc/sys/net/ipv4/conf/all/rp_filter #

Now restart your network for the new configuration to take effect.

However editing file’s inside /proc is not at all a good practice. So you can do this by editing sysctl.conf file

# sysctl -w "net.ipv4.conf.all.rp_filter=1" net.ipv4.conf.all.rp_filter = 1

Replace «all» with default,eth0,<or any interface name of your wish>

Optical Properties of Rugate Filters

In comparison with filters based on standard dielectric coatings, rugate filters provide some special potentials:

- A sinusoidal oscillation of the refractive index can create an isolated peak in the reflectance spectrum, without any significant sidebands as are obtained for ordinary Bragg mirrors.

Such sidebands essentially arise from the higher-order Fourier components of a rectangular oscillation.

However, a clean filter behavior of that type requires two additional measures: avoiding additional reflections from the ends and apodization.

Ref. gives an example. - It is possible to linearly superimpose multiple oscillations of the refractive index in order to combine multiple reflection features.

- Rugate filters have also been reported to have substantially higher laser-induced damage thresholds , compared with conventional filters.

Луковая маршрутизация

Что такое луковая маршрутизация? Это Tor. А Tor, в свою очередь, — это система, которая позволяет создать полностью анонимную сеть с выходом в интернет. Термин «луковый» здесь применен относительно модели работы, при которой любой сетевой пакет будет «обернут» в три слоя шифрования и пройдет на пути к адресату через три ноды, каждая из которых будет снимать свой слой и передавать результат дальше

Все, конечно, сложнее, но для нас важно только то, что это один из немногих типов организации сети, который позволяет сохранить полную анонимность

Тем не менее, где есть анонимность, там есть и проблемы соединения. И у Tor их как минимум три: он чудовищно медленный (спасибо шифрованию и передаче через цепочку нод), он будет создавать нагрузку на твою сеть (потому что ты сам будешь одной из нод), и он уязвим для перехвата трафика. Последнее — естественное следствие возможности выхода в интернет из Tor-сети: последняя нода (выходная) будет снимать последний слой шифрования и может получить доступ к данным.

Тем не менее Tor очень легко установить и использовать:

Все, теперь на локальной машине будет прокси-сервер, ведущий в сеть Tor. Адрес: 127.0.0.1:9050, вбить в браузер можно с помощью все того же расширения, ну или добавить через настройки. Имей в виду, что это SOCKS, а не HTTP-прокси.

Tor говорит, что он не HTTP-прокси

INFO

Версия Tor для Android называется Orbot.

Чтобы введенный в командной строке пароль не был сохранен в истории, можно использовать хитрый трюк под названием «добавь в начале команды пробел».

Именно ecryptfs используется для шифрования домашнего каталога в Ubuntu.

Борьба с флудом

Приведу несколько команд, которые могут помочь при флуде твоего хоста.

Подсчет количества коннектов на определенный порт:

Подсчет числа «полуоткрытых» TCP-соединений:

Просмотр списка IP-адресов, с которых идут запросы на подключение:

Анализ подозрительных пакетов с помощью tcpdump:

Дропаем подключения атакующего:

Ограничиваем максимальное число «полуоткрытых» соединений с одного IP к конкретному порту:

Отключаем ответы на запросы ICMP ECHO:

Вот и все. Не вдаваясь в детали и без необходимости изучения мануалов мы создали Linux-box, который защищен от вторжения извне, от руткитов и прочей заразы, от непосредственно вмешательства человека, от перехвата трафика и слежки. Остается лишь регулярно обновлять систему, запретить парольный вход по SSH, убрать лишние сервисы и не допускать ошибок конфигурирования.

TCP port range

This is relevant if you’re configuring a loadbalancer or a reverse proxy. In this scenario you may run into an issue called TCP source port exhaustion. If you’re not using some sort of connection pooling or multiplexing, then in general each connection from a client to the loadbalancer also opens a related connection to one of the backends. This will open a socket on the loadbalancer’s system. Each socket is identified by the following 5-tuple:

- Protocol (we assume here, that this is always TCP)

- Source IP

- Source port

- Destination IP

- Destination port

You cannot have 2 sockets that are identified by the same 5-tuple on the system. The problem is TCP only has 65535 ports available. So in a scenario where the reverse proxy only has a single IP address and is proxying to a single backend on a single IP and port, we’re looking at 1*1*65535*1*1 unique combinations. The actual number is actually lower, because by default Linux will only use range 32768-60999 as the source ports for outgoing connections. We can increase this, but the first 1024 are reserved, so in the end we set it to a range of 1024–65535. This is done with , using the same process as described before — writing the value to or and executing

net.ipv4.ip_local_port_range=1024 65535

With an effective number of ports equal to 64511 ports, we have more breathing room, but in certain situations it might still not be enough. In that case you can look into increasing the number of other items in the 5-tuple:

- Configure more than one IP on the loadbalancer system. Make sure the loadbalancer is configured to also use these additional IPs

- Configure the destination backend to listen on multiple IPs and configure the loadbalancer to connect to these IPs

- Configure the destination to listen on multiple ports, if possible

Increasing the number of source IPs on the loadbalancer is most likely the easiest option available. In HAProxy this is possible by configuring the option in the server line of the backend. If the backend is on the local system, it might be easier to tweak the destination IPs, as every request to any IP in the 127.0.0.0/8 pool will go to localhost without any additional configuration required.

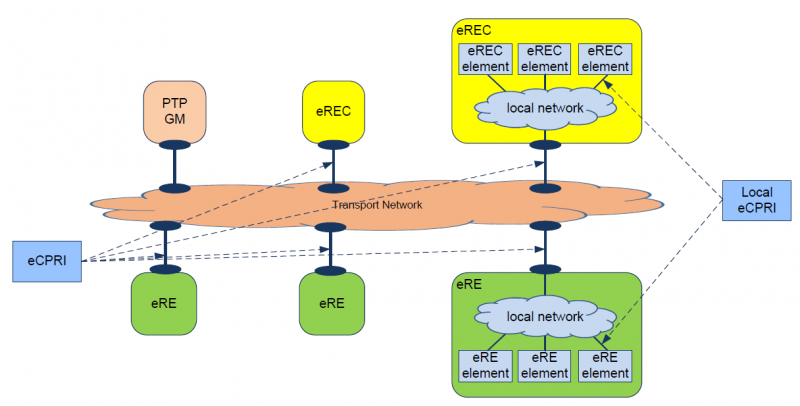

What is reverse path filtering?

Reverse path filtering is a mechanism adopted by the Linux kernel, as well as most of the networking devices out there to check whether a receiving packet source address is routable.

So in other words, when a machine with reverse path filtering enabled recieves a packet, the machine will first check whether the source of the recived packet is reachable through the interface it came in.

- If it is routable through the interface which it came, then the machine will accept the packet

- If it is not routable through the interface, which it came, then the machine will drop that packet.

Latest red hat machine’s will give you one more option. This option is kind of liberal in terms of accepting traffic.

If the recieved packet’s source address is routable through any of the interfaces on the machine, the machine will accept the packet.

Процесс ksoftirqd съедает все ресурсы системы. Что делать?¶

Ядро операционной системы взаимодействует с устройствами посредством прерываний. Когда возникает новое прерывание, оно немедленно приостанавливает работу текущего выполняемого процесса, переключается в режим ядра и начинает его обработку.

Может случиться так, что прерывания будут генерироваться настолько часто, что ядро не сможет их обрабатывать немедленно, в порядке получения. На этот случай имеется специальный механизм, помещающий полученные прерывания в очередь для дальнейшей обработки. Этой очередью управляет особый поток ядра ksoftirqd (создаётся по одному на каждый имеющийся процессор или ядро многоядерного процессора).

Угроза извне

Теперь позаботимся об угрозах, исходящих из недр всемирной паутины. Здесь я должен был бы начать рассказ об iptables и pf, запущенном на выделенной машине под управлением OpenBSD, но все это излишне, когда есть ipkungfu. Что это такое? Это скрипт, который произведет за нас всю грязную работу по конфигурированию брандмауэра, без необходимости составлять километровые списки правил. Устанавливаем:

Правим конфиг:

Для включения ipkungfu открываем файл /etc/default/ipkungfu и меняем строку IPKFSTART = 0 на IPKFSTART = 1. Запускаем:

Дополнительно внесем правки в /etc/sysctl.conf:

Активируем изменения:

Configuration tips that enable serving a higher number of concurrent users

Pawel ChmielinskiFollow

Feb 27, 2017 · 10 min read

If you have ever configured a loadbalancer like HAProxy or a webserver like Nginx or Apache to handle a high number of concurrent users, then you might have discovered that there are quite a few tweaks required in order to achieve the desired effects. Could you recite all of them from the top of your head? If not, don’t worry — this article has got you covered.

We will have a look at two types of tweaks. First ones are Linux kernel tweaks, which are common regardless if you’re using HAProxy, Nginx, Apache or other webservers. The second type covers HAProxy specific configuration.

Number of open files

Why should we care about open files when handling web traffic? It’s simple — every incoming or outgoing connection needs to open a socket and each socket is a file on a Linux system. If you’re configuring a webserver serving static content from the local filesystem, then each connection will result in one open socket. However, if you’re configuring a loadbalancer serving content from backend servers then each incoming connections will open a minimum of two sockets, or even more, depending on the loadbalancing configuration.

It’s important that you configure the maximum number of open files, as the default number is pretty low. On Ubuntu 16.04 it’s up to 4096 open files per process, which is not an awful lot. You know that you have hit the limit if you see lines in your logs.

Now, there are two ways to configure max open files, depending on whether your distribution uses systemd or not. Most tutorials found on Google assume systemd is not used, in which case the number of open files can be set by editing (assuming pam_limits is used for daemon processes, see this answer for a more thorough explanation). A sample config to set both the soft and hard limits for every user on the system to 100k would look like this:

* soft nofile 100000* hard nofile 100000root soft nofile 100000root hard nofile 100000

Afterwards restart your webserver/loadbalancer to apply the changes. You can check if it worked by issuing:

cat /proc/<PID_of_webserver>/limits

If the daemon process doesn’t use pam_limits, it won’t work. A bit hacky workaround is to use directly in the init script or any of the files sourced inside it, like on Ubuntu.

If you’re on a system that uses systemd you will find that setting doesn’t work as well. That’s because systemd doesn’t use the at all, but instead uses it’s own configuration to determine the limits. However, keep in mind that even with systemd, is still useful when running a long-running process from within a user shell, as the user limits still use the old config file. These can be displayed by issuing:

ulimit -a

Okay, how do we configure maximum open files for systemd? The answer is to override the configuration for a specific service. We do this by placing a file in . The file content could look like this to set a 100k max open files limit:

LimitNOFILE=100000

After the change we have to reload systemd configuration and restart our service:

systemctl daemon-reloadsystemctl restart <service_name>

To make sure that the override worked use the following:

systemctl cat <service_name>cat /proc/<PID>/limits

This should work fine for Apache and Nginx, but if you’re running HAProxy, you’re in for a surprise. When we restart HAProxy, there are actually 3 processes spawned and only the top-level one () has our limits applied! That’s because HAProxy configured it’s open files limit automatically based on the value in . We will have a look at this parameter further down the article.

There are two other values that relate to maximum open files — global values for the system. These can be checked by issuing:

sysctl fs.file-maxsysctl fs.nr_open

determines the maximum number of files in total that can be opened on the system. determines the maximum value that can be configured to. On modern distributions both are configured to high values, but if you find that’s not the case for your system, then feel free to tweak them as well. In any case make sure that they are configured much higher the the value used in and/or , because we don’t want a single process to be able to block the operating system from opening files.