Lxml 4.4.0

Contents:

- Dealing with stylesheet complexity

- Unescaping XML

- ETXPath

- What standards does lxml implement?

- How can I sort the attributes?

- Elements carry attributes as a dict

- Parsing XML with SAX APIs

- My program crashes when run with mod_python/Pyro/Zope/Plone/…

- XPath support

- Who uses lxml?

- Parsing the Book Example

- Other ways to increase performance

- Serialization

- Finding elements quickly

Dealing with stylesheet complexity

Some applications require a larger set of rather diverse stylesheets.

lxml.etree allows you to deal with this in a number of ways. Here are

some ideas to try.

The simplest way to reduce the diversity is by using XSLT

parameters that you pass at call time to configure the stylesheets.

The partial() function in the functools module

may come in handy here. It allows you to bind a set of keyword

arguments (i.e. stylesheet parameters) to a reference of a callable

stylesheet. The same works for instances of the XPath()

evaluator, obviously.

You may also consider creating stylesheets programmatically. Just

create an XSL tree, e.g. from a parsed template, and then add or

replace parts as you see fit. Passing an XSL tree into the XSLT()

constructor multiple times will create independent stylesheets, so

later modifications of the tree will not be reflected in the already

created stylesheets. This makes stylesheet generation very

straightforward.
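As a rough sketch of the partial() idea, the following binds one stylesheet parameter to a compiled stylesheet. The stylesheet itself, the `greeting` parameter and the sample document are made up for illustration; only `etree.XSLT`, `etree.XSLT.strparam()` and `functools.partial` come from the libraries.

```python
from functools import partial

from lxml import etree

# Hypothetical stylesheet with one configurable parameter, "greeting".
STYLESHEET = etree.XML("""\
<xsl:stylesheet version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="text"/>
  <xsl:param name="greeting" select="'Hello'"/>
  <xsl:template match="/">
    <xsl:value-of select="concat($greeting, ', ', /root/name)"/>
  </xsl:template>
</xsl:stylesheet>
""")

transform = etree.XSLT(STYLESHEET)

# Bind the parameter once; strparam() quotes the value as an XPath string.
formal = partial(transform, greeting=etree.XSLT.strparam("Good day"))

doc = etree.XML("<root><name>World</name></root>")
default_text = str(transform(doc)).strip()   # uses the stylesheet default
formal_text = str(formal(doc)).strip()       # uses the bound parameter
print(default_text)
print(formal_text)
```

The bound callable can be passed around like any other stylesheet, so one compiled stylesheet can stand in for several near-identical ones.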

Unescaping XML

The xml.sax.saxutils module provides an unescape() function as well. This function converts the &amp;, &gt;, and &lt; entity references back to the corresponding characters:

>>> from xml.sax.saxutils import unescape
>>> unescape("&lt; &amp; &gt;")

'< & >'

Note that the predefined entities &apos; and &quot; are not supported by default. Like the escape() and quoteattr() functions, unescape() can be provided with an additional mapping of replacements that should be performed. This can be used to add support for the additional predefined entities:

>>> unescape("&apos; &quot;", {"&apos;": "'", "&quot;": '"'})
'\' "'

This can also be used to perform replacements for longer strings.

Note that the unescape() function does not deal with arbitrary character references. This could be accomplished by passing in a really large mapping as the second argument, but that’s pretty silly given the size of the mapping that’s required to support both decimal and hexadecimal character references (and the hexadecimal references containing A-F would need to be accounted for in all permutations of upper and lower case, and leading zeros would need to be considered). If we want character references to be considered, we can use the Expat XML parser included with all recent versions of Python. This function will do the trick:

import xml.parsers.expat

def unescape(s):
    # Python 2 code: `unicode` and `returns_unicode` do not exist in Python 3
    want_unicode = False
    if isinstance(s, unicode):
        s = s.encode("utf-8")
        want_unicode = True

    # the rest of this assumes that `s` is UTF-8
    list = []

    # create and initialize a parser object
    p = xml.parsers.expat.ParserCreate("utf-8")
    p.buffer_text = True
    p.returns_unicode = want_unicode
    p.CharacterDataHandler = list.append

    # parse the data wrapped in a dummy element
    # (needed so the "document" is well-formed)
    p.Parse("<e>", 0)
    p.Parse(s, 0)
    p.Parse("</e>", 1)

    # join the extracted strings and return
    es = ""
    if want_unicode:
        es = u""
    return es.join(list)

Note the extra work we have to go to so that the result has the same type as the input; this came for free with the .replace()-based approaches.

Using this unescape() function provides support for character references and the predefined entities, but does not let us extend the mapping with additional entity definitions (a more elaborate function could make that possible, though). Assuming we’ve imported this from whatever module we stored it in, we get:

>>> unescape("abc")

'abc'

>>> unescape(u"abc")

u'abc'

>>> unescape("&#97;&#98;&#99;")

'abc'

We also get support for constructs that we might not want in some contexts, though these are probably acceptable since we’re looking at XML data:

>>> unescape("a<![CDATA[b]]>c")

'abc'

>>> unescape("a<!--wow!-->bc<!--this is really long-->")

'abc'
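The function above targets Python 2. A possible Python 3 rendition of the same Expat-based approach is sketched below; the name `unescape_py3` and the bytes handling are choices made for this sketch, not part of the original text.

```python
import xml.parsers.expat


def unescape_py3(text):
    """Resolve predefined entities and character references via Expat.

    Accepts str or UTF-8 encoded bytes and returns the same type.
    """
    was_bytes = isinstance(text, bytes)
    if was_bytes:
        text = text.decode("utf-8")

    chunks = []
    parser = xml.parsers.expat.ParserCreate()
    parser.buffer_text = True
    parser.CharacterDataHandler = chunks.append

    # Wrap the data in a dummy element so the "document" is well-formed.
    parser.Parse("<e>", False)
    parser.Parse(text, False)
    parser.Parse("</e>", True)

    result = "".join(chunks)
    return result.encode("utf-8") if was_bytes else result


print(unescape_py3("&lt;&#97;&amp;&#x62;&gt;"))
```

As with the original, malformed input (for example a bare `&`) makes Expat raise `xml.parsers.expat.ExpatError` rather than being passed through.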

ETXPath

ElementTree supports a language named ElementPath in its find*() methods.

One of the main differences between XPath and ElementPath is that the XPath

language requires an indirection through prefixes for namespace support,

whereas ElementTree uses the Clark notation ({ns}name) to avoid prefixes

completely. The other major difference regards the capabilities of both path

languages. Where XPath supports various sophisticated ways of restricting the

result set through functions and boolean expressions, ElementPath only

supports pure path traversal without nesting or further conditions. So, while

the ElementPath syntax is self-contained and therefore easier to write and

handle, XPath is much more powerful and expressive.

lxml.etree bridges this gap through the class ETXPath, which accepts XPath

expressions with namespaces in Clark notation. It is identical to the

XPath class, except for the namespace notation. Normally, you would

write:

>>> root = etree.XML("<root xmlns='ns'><a><b/></a><b/></root>")
>>> find = etree.XPath("//p:b", namespaces={'p': 'ns'})
>>> print(find(root)[0].tag)

{ns}b

ETXPath allows you to change this to:
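A minimal sketch of the ETXPath form, reusing the same small document; the variable names are illustrative only.

```python
from lxml import etree

root = etree.XML("<root xmlns='ns'><a><b/></a><b/></root>")

# Prefix-based XPath needs a prefix-to-namespace mapping.
find_prefixed = etree.XPath("//p:b", namespaces={"p": "ns"})

# ETXPath takes the namespace inline, in Clark notation.
find_clark = etree.ETXPath("//{ns}b")

prefixed_tags = [el.tag for el in find_prefixed(root)]
clark_tags = [el.tag for el in find_clark(root)]
print(clark_tags)
```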

What standards does lxml implement?

Compliance with XML standards depends on the support in libxml2 and libxslt.

Here is a quote from http://xmlsoft.org/:

lxml currently supports libxml2 2.6.20 or later, which has even better

support for various XML standards. The important ones are:

- XML 1.0

- HTML 4

- XML namespaces

- XML Schema 1.0

- XPath 1.0

- XInclude 1.0

- XSLT 1.0

- EXSLT

- XML catalogs

- canonical XML

- RelaxNG

- xml:id

- xml:base

Support for XML Schema is currently not 100% complete in libxml2, but

is definitely very close to compliance. Schematron is supported in

two ways, the best being the original ISO Schematron reference

implementation via XSLT. libxml2 also supports loading documents

through HTTP and FTP.

How can I sort the attributes?

lxml preserves the order in which attributes were originally created.

There is one case in which this is difficult: when attributes are passed

in a dict or as keyword arguments to the Element() factory. Before Python

3.6, dicts had no predictable order.

Since Python 3.6, however, dicts also preserve the creation order of their keys,

and lxml makes use of that since release 4.4.

In earlier versions, lxml tries to assure at least reproducible output by

sorting the attributes from the dict before creating them. All sequential

ways to set attributes keep their order and do not apply sorting. Also,

OrderedDict instances are recognised and not sorted.

In cases where you cannot control the order in which attributes are created,

you can still change it before serialisation. To sort them by name, for example,

you can apply the following function:
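A minimal sketch of such a function; the name `sort_attributes` and the sample element are illustrative, not taken from lxml.

```python
from lxml import etree


def sort_attributes(element):
    """Reorder the attributes of `element` alphabetically, in place."""
    attrib = element.attrib
    if len(attrib) > 1:
        ordered = sorted(attrib.items())
        attrib.clear()
        attrib.update(ordered)


root = etree.Element("root")
for name, value in [("c", "3"), ("a", "1"), ("b", "2")]:
    root.set(name, value)          # sequential set() keeps creation order

before = etree.tostring(root)
sort_attributes(root)
after = etree.tostring(root)
print(before, after)
```

To sort a whole document, the same function can be applied to every element returned by `root.iter()`.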

Elements carry attributes as a dict

XML elements support attributes. You can create them directly in the Element

factory:

>>> root = etree.Element("root", interesting="totally")

>>> etree.tostring(root)

b'<root interesting="totally"/>'

Attributes are just unordered name-value pairs, so a very convenient way

of dealing with them is through the dictionary-like interface of Elements:

>>> print(root.get("interesting"))

totally

>>> print(root.get("hello"))

None

>>> root.set("hello", "Huhu")

>>> print(root.get("hello"))

Huhu

>>> etree.tostring(root)
b'<root interesting="totally" hello="Huhu"/>'

>>> sorted(root.keys())
['hello', 'interesting']

>>> for name, value in sorted(root.items()):
...     print('%s = %r' % (name, value))

hello = 'Huhu'

interesting = 'totally'

For the cases where you want to do item lookup or have other reasons for

getting a ‘real’ dictionary-like object, e.g. for passing it around,

you can use the attrib property:

>>> attributes = root.attrib

>>> print(attributes["interesting"])
totally

>>> print(attributes.get("no-such-attribute"))
None

>>> attributes["hello"] = "Guten Tag"
>>> print(attributes["hello"])

Guten Tag

>>> print(root.get("hello"))

Guten Tag

Note that attrib is a dict-like object backed by the Element itself.

This means that any changes to the Element are reflected in attrib

and vice versa. It also means that the XML tree stays alive in memory

as long as the attrib of one of its Elements is in use. To get an

independent snapshot of the attributes that does not depend on the XML

tree, copy it into a dict:
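For instance, as a small sketch (the variable names are illustrative):

```python
from lxml import etree

root = etree.Element("root", interesting="totally")
root.set("hello", "Guten Tag")

snapshot = dict(root.attrib)      # plain dict, detached from the tree
root.set("hello", "Huhu")         # a later change to the Element

live_value = root.attrib["hello"]     # attrib follows the Element
snapshot_value = snapshot["hello"]    # the copy does not
print(sorted(snapshot.items()))
```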

Parsing XML with SAX APIs

SAX is a standard interface for event-driven XML parsing. Parsing XML with SAX generally requires you to create your own ContentHandler by subclassing xml.sax.ContentHandler.

Your ContentHandler handles the particular tags and attributes of your flavor(s) of XML. A ContentHandler object provides methods to handle various parsing events. Its owning parser calls ContentHandler methods as it parses the XML file.

The methods startDocument and endDocument are called at the start and the end of the XML file. The method characters(text) is passed character data of the XML file via the parameter text.

The ContentHandler is called at the start and end of each element. If the parser is not in namespace mode, the methods startElement(tag, attributes) and endElement(tag) are called; otherwise, the corresponding methods startElementNS and endElementNS are called. Here, tag is the element tag, and attributes is an Attributes object.
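The callbacks described above can be sketched with a small handler. The class name `BookHandler` and the sample document are invented for this example; the parser here is not in namespace mode, so startElement and endElement are the methods that get called.

```python
import xml.sax


class BookHandler(xml.sax.ContentHandler):
    """Collect tag counts, id attributes and character data."""

    def __init__(self):
        super().__init__()
        self.counts = {}
        self.ids = []
        self.text = []

    def startElement(self, tag, attributes):
        # Called at the start of each element; `attributes` is an Attributes object.
        self.counts[tag] = self.counts.get(tag, 0) + 1
        if "id" in attributes.getNames():
            self.ids.append(attributes.get("id"))

    def characters(self, text):
        # May be called several times for one run of text.
        self.text.append(text)


handler = BookHandler()
xml.sax.parseString(
    b"<catalog><book id='1'>A</book><book id='2'>B</book></catalog>",
    handler,
)
print(handler.counts, handler.ids, "".join(handler.text))
```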

Here are other important methods to understand before proceeding −

My program crashes when run with mod_python/Pyro/Zope/Plone/…

These environments can use threads in a way that may not make it obvious when

threads are created and what happens in which thread. This makes it hard to

ensure lxml’s threading support is used in a reliable way. Sadly, if problems

arise, they are as diverse as the applications, so it is difficult to provide

any generally applicable solution. Also, these environments are so complex

that problems become hard to debug and even harder to reproduce in a

predictable way. If you encounter crashes in one of these systems, but your

code runs perfectly when started by hand, the following gives you a few hints

for possible approaches to solve your specific problem:

- make sure you use recent versions of libxml2, libxslt and lxml. The libxml2 developers keep fixing bugs in each release, and lxml also tries to become more robust against possible pitfalls. So newer versions might already fix your problem in a reliable way. Version 2.2 of lxml contains many improvements.

- make sure the library versions you installed are really used. Do not rely on what your operating system tells you! Print the version constants in lxml.etree from within your runtime environment to make sure it is the case. This is especially a problem under MacOS-X when newer library versions were installed in addition to the outdated system libraries. Please read the bugs section regarding MacOS-X in this FAQ.

- if you use mod_python, try setting this option:

- in a threaded environment, try to initially import lxml.etree from the main application thread instead of doing first-time imports separately in each spawned worker thread. If you cannot control the thread spawning of your web/application server, an import of lxml.etree in sitecustomize.py or usercustomize.py may still do the trick.

- compile lxml without threading support by running setup.py with the --without-threading option. While this might be slower in certain scenarios on multi-processor systems, it might also keep your application from crashing, which should be worth more to you than peak performance. Remember that lxml is fast anyway, so concurrency may not even be worth it.

- look out for fancy XSLT stuff like foreign document access or passing in subtrees through XSLT variables. This might or might not work, depending on your specific usage. Again, later versions of lxml and libxslt provide safer support here.

- try copying trees at suspicious places in your code and working with those instead of a tree shared between threads. Note that the copying must happen inside the target thread to be effective, not in the thread that created the tree. Serialising in one thread and parsing in another is also a simple (and fast) way of separating thread contexts.

- try keeping thread-local copies of XSLT stylesheets, i.e. one per thread, instead of sharing one. Also see the question above.

- you can try to serialise suspicious parts of your code with explicit thread locks, thus disabling the concurrency of the runtime system.
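Three of the hints above (printing the version constants, keeping one stylesheet per thread, and parsing inside the target thread) can be sketched as follows. The helper names, the tiny stylesheet and the payloads are invented for this example; the version constants are real attributes of lxml.etree.

```python
import threading

from lxml import etree


def library_versions():
    """Return the version tuples lxml reports for itself and its C libraries."""
    return {
        "lxml": etree.LXML_VERSION,
        "libxml2 (runtime)": etree.LIBXML_VERSION,
        "libxml2 (compiled)": etree.LIBXML_COMPILED_VERSION,
        "libxslt (runtime)": etree.LIBXSLT_VERSION,
        "libxslt (compiled)": etree.LIBXSLT_COMPILED_VERSION,
    }


# Hypothetical stylesheet, kept as bytes so every thread compiles its own copy.
XSL_SOURCE = b"""\
<xsl:stylesheet version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:template match="/"><out><xsl:value-of select="/in"/></out></xsl:template>
</xsl:stylesheet>
"""

_local = threading.local()


def get_transform():
    """Return an XSLT object owned by the calling thread."""
    if not hasattr(_local, "transform"):
        _local.transform = etree.XSLT(etree.XML(XSL_SOURCE))
    return _local.transform


results = {}


def worker(name, payload):
    doc = etree.fromstring(payload)   # parsed inside the worker thread
    results[name] = get_transform()(doc).getroot().text


threads = [
    threading.Thread(target=worker, args=(f"t{i}", f"<in>{i}</in>".encode()))
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

for label, version in library_versions().items():
    print(label, version)
```

Running `library_versions()` from inside the server process, rather than from a shell, is what shows which libraries are actually loaded there.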

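XPath support

This module provides limited support for XPath expressions for locating elements in a tree. The goal is to support a small subset of the abbreviated syntax; a full XPath engine is outside the scope of the module.

Example

Here is an example that demonstrates some of the XPath capabilities of the module. It uses the countrydata XML document from an earlier section: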

import xml.etree.ElementTree as ET

root = ET.fromstring(countrydata)

# Top-level elements

root.findall(".")

# All 'neighbor' grand-children of 'country' children of the top-level

# elements

root.findall("./country/neighbor")

# Nodes with name='Singapore' that have a 'year' child
root.findall(".//year/..[@name='Singapore']")

# 'year' nodes that are children of nodes with name='Singapore'
root.findall(".//*[@name='Singapore']/year")

# All 'neighbor' nodes that are the second child of their parent
root.findall(".//neighbor[2]")

For XML with namespaces, use the usual qualified notation:

# All dublin-core "title" tags in the document

root.findall(".//{http://purl.org/dc/elements/1.1/}title")
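To try these expressions without the full document, here is a self-contained sketch. The `countrydata` string below is a trimmed, made-up stand-in for the real sample data.

```python
import xml.etree.ElementTree as ET

# Hypothetical, shortened stand-in for the countrydata document.
countrydata = """\
<data>
  <country name="Liechtenstein">
    <year>2008</year>
    <neighbor name="Austria"/>
    <neighbor name="Switzerland"/>
  </country>
  <country name="Singapore">
    <year>2011</year>
    <neighbor name="Malaysia"/>
  </country>
</data>
"""

root = ET.fromstring(countrydata)

# Parents of 'year' elements, restricted to name='Singapore'
singapore = [c.get("name") for c in root.findall(".//year/..[@name='Singapore']")]

# 'year' children of the node with name='Singapore'
years = [y.text for y in root.findall(".//*[@name='Singapore']/year")]

# Second 'neighbor' of each parent
second = [n.get("name") for n in root.findall(".//neighbor[2]")]

print(singapore, years, second)
```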

Who uses lxml?

As an XML library, lxml is often used under the hood of in-house

server applications, such as web servers or applications that

facilitate some kind of content management. Many people who deploy

Zope, Plone or Django use it together with lxml in the background,

without speaking publicly about it. Therefore, it is hard to get an

idea of who uses it, and the following list of ‘users and projects we

know of’ is very far from a complete list of lxml’s users.

Also note that the compatibility to the ElementTree library does not

require projects to set a hard dependency on lxml — as long as they do

not take advantage of lxml’s enhanced feature set.

- cssutils, a CSS parser and toolkit, can be used with lxml.cssselect

- Deliverance, a content theming tool

- Enfold Proxy 4, a web server accelerator with on-the-fly XSLT processing

- Inteproxy, a secure HTTP proxy

- lwebstring, an XML template engine

- openpyxl, a library to read/write MS Excel 2007 files

- OpenXMLlib, a library for handling OpenXML document meta data

- PsychoPy, psychology software in Python

- Pycoon, a WSGI web development framework based on XML pipelines

- pycsw, an OGC CSW server implementation written in Python

- PyQuery, a query framework for XML/HTML, similar to jQuery for JavaScript

- python-docx, a package for handling Microsoft’s Word OpenXML format

- Rambler, news aggregator on Runet

- rdfadict, an RDFa parser with a simple dictionary-like interface

- xupdate-processor, an XUpdate implementation for lxml.etree

- Diazo, an XSLT-under-the-hood web site theming engine

Zope3 and some of its extensions have good support for lxml:

- gocept.lxml, Zope3 interface bindings for lxml

- z3c.rml, an implementation of ReportLab’s RML format

- zif.sedna, an XQuery based interface to the Sedna OpenSource XML database

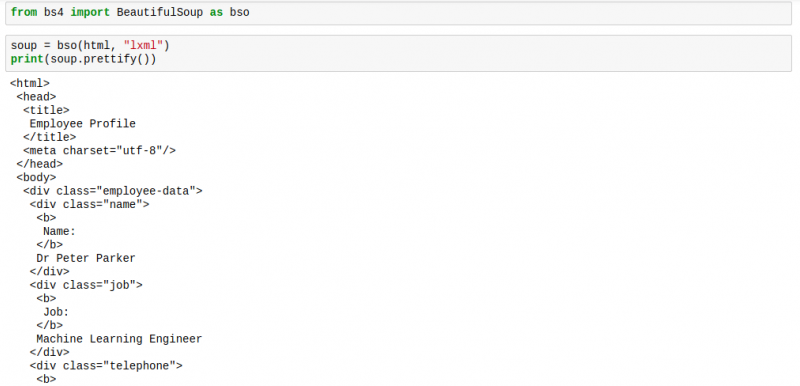

Parsing the Book Example

Well, the result of that example was kind of boring. Most of the time, you want to save the data you extract and do something with it, not just print it out to stdout. So for our next example, we’ll create a data structure to contain the results. Our data structure for this example will be a list of dicts. We’ll use the MSDN book example here from the earlier chapter again. Save the following XML as example.xml:

<?xml version="1.0"?>
<catalog>
   <book id="bk101">
      <author>Gambardella, Matthew</author>
      <title>XML Developer's Guide</title>
      <genre>Computer</genre>
      <price>44.95</price>
      <publish_date>2000-10-01</publish_date>
      <description>An in-depth look at creating applications
      with XML.</description>
   </book>
   <book id="bk102">
      <author>Ralls, Kim</author>
      <title>Midnight Rain</title>
      <genre>Fantasy</genre>
      <price>5.95</price>
      <publish_date>2000-12-16</publish_date>
      <description>A former architect battles corporate zombies,
      an evil sorceress, and her own childhood to become queen
      of the world.</description>
   </book>
   <book id="bk103">
      <author>Corets, Eva</author>
      <title>Maeve Ascendant</title>
      <genre>Fantasy</genre>
      <price>5.95</price>
      <publish_date>2000-11-17</publish_date>
      <description>After the collapse of a nanotechnology
      society in England, the young survivors lay the
      foundation for a new society.</description>
   </book>
</catalog>

Now let’s parse this XML and put it in our data structure!

from lxml import etree

def parseBookXML(xmlFile):
    with open(xmlFile) as fobj:
        xml = fobj.read()

    root = etree.fromstring(xml)

    book_dict = {}
    books = []
    # iterating over an element yields its children
    for book in root:
        for elem in book:
            if not elem.text:
                text = "None"
            else:
                text = elem.text
            print(elem.tag + " => " + text)
            book_dict[elem.tag] = text
        if book.tag == "book":
            books.append(book_dict)
            book_dict = {}
    return books

if __name__ == "__main__":
    parseBookXML("example.xml")

This example is pretty similar to our last one, so we’ll just focus on the differences present here. Right before we start iterating over the context, we create an empty dictionary object and an empty list. Then inside the loop, we create our dictionary like this:

book_dict[elem.tag] = text

The text is either elem.text or None. Finally, if the tag happens to be book, then we’re at the end of a book section and need to add the dict to our list as well as reset the dict for the next book. As you can see, that is exactly what we have done. A more realistic example would be to put the extracted data into a Book class. I have done the latter with json feeds before.
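The Book class idea could look roughly like this. The `Book` dataclass, the `parse_books` helper and the shortened sample are assumptions made for this sketch; the import falls back to the standard library because only ElementTree-compatible calls are used.

```python
from dataclasses import dataclass

try:
    from lxml import etree
except ImportError:  # the calls used below also exist in the standard library
    import xml.etree.ElementTree as etree


@dataclass
class Book:
    id: str
    author: str
    title: str
    genre: str
    price: float
    publish_date: str
    description: str


def parse_books(xml_text):
    """Turn every <book> element into a Book instance."""
    root = etree.fromstring(xml_text)
    books = []
    for node in root.iter("book"):
        fields = {child.tag: (child.text or "").strip() for child in node}
        fields["price"] = float(fields["price"])
        books.append(Book(id=node.get("id"), **fields))
    return books


# Shortened sample in the same shape as example.xml
SAMPLE = """\
<catalog>
   <book id="bk101">
      <author>Gambardella, Matthew</author>
      <title>XML Developer's Guide</title>
      <genre>Computer</genre>
      <price>44.95</price>
      <publish_date>2000-10-01</publish_date>
      <description>An in-depth look at creating applications with XML.</description>
   </book>
</catalog>
"""

books = parse_books(SAMPLE)
print(books[0].title, books[0].price)
```

A class makes the expected fields explicit: a book with a missing or unexpected child element fails loudly at construction time instead of producing a dict with surprising keys.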

Other ways to increase performance

In addition to the use of specific methods within lxml, you can

use approaches outside of the library to influence execution speed. Some

of these are simple code changes; others require new thinking about how to

handle large data problems.

Psyco

The Psyco module is an often-missed way to increase the speed of Python

applications with minimal work. Typical gains for a pure Python program

are between two and four times, but lxml does most of its work in C, so

the difference is unusually small. When I ran with Psyco enabled, I reduced runtime by only three seconds

(43.9 seconds versus 47.3 seconds). Psyco has a large memory overhead

which might even negate any gains if the machine has to go to swap.

If your lxml-driven application has core pure Python code that’s executed

frequently (perhaps extensive string manipulation on text nodes), you

might benefit if you enable Psyco for only those methods. For more

information, see the Psyco project documentation.

Threading

If, instead, your application relies mostly on internal, C-driven lxml

features, it might be to your advantage to run it as a threaded

application in a multiprocessor environment. There are restrictions on how

to start the threads—especially with XSLT. Consult the FAQ section

on threads in the lxml documentation for more information.

Divide and conquer

If it is possible to divide extremely large documents into individually

analyzable subtrees, then it becomes feasible to split the document at the

subtree level (using lxml’s fast serialization) and distribute the work on

those files among multiple computers. Employing on-demand virtual servers

is an increasingly popular solution for executing central processing unit

(CPU) bound offline tasks.

Serialization

If all you need to do with an XML file is grab some text from within a

single node, it might be possible to use a simple regular expression that

will probably operate faster than any XML parser. In practice, though,

this is nearly impossible to get right when the data is at all complex,

and I do not recommend it. XML libraries are invaluable when true data

manipulation is required.

Serializing XML to a string or file is where lxml excels because it relies

on C code directly. If your task requires any

serialization at all, lxml is a clear choice, but there are some tricks to

get the best performance out of the library.

Use deepcopy when serializing subtrees

lxml retains references between child nodes and their parents. One effect of this is that a node in lxml can have one and only one parent. (cElementTree has no concept of parent nodes.)

Listing 6 takes each <Record> in the copyright file and writes a simplified record containing only the title and the copyright information.

Listing 6. Serialize an element’s children

from copy import deepcopy

from lxml import etree

def serialize(elem):
    # Output a new tree like:
    # <SimplerRecord>
    #   <Title>This title</Title>
    #   <Copyright><Date>date</Date><Id>id</Id></Copyright>
    # </SimplerRecord>

    # Create a new root node
    r = etree.Element('SimplerRecord')

    # Create a new child
    t = etree.SubElement(r, 'Title')

    # Set this child's text attribute to the original text contents of <Title>
    t.text = next(elem.iterchildren(tag='Title')).text

    # Deep copy a descendant tree
    for c in elem.iterchildren(tag='Copyright'):
        r.append(deepcopy(c))
    return r

# tostring() with an encoding returns bytes, so open the file in binary mode
out = open('titles.xml', 'wb')
context = etree.iterparse('copyright.xml', events=('end',), tag='Record')

# Iterate through each of the <Record> nodes using our fast iteration method
fast_iter(context,
          # For each <Record>, serialize a simplified version and write it
          # to the output file
          lambda elem:
              out.write(
                  etree.tostring(serialize(elem), encoding='utf-8')))
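Listing 6 relies on a fast_iter helper. One common shape for such a helper is sketched below, as an assumption about what it does: call a function for each element that iterparse delivers, then release the element and its already-processed siblings. The in-memory sample document is made up.

```python
from io import BytesIO

from lxml import etree


def fast_iter(context, func):
    """Call func(elem) for every element from iterparse, then free it."""
    for event, elem in context:
        func(elem)
        elem.clear()                       # drop the element's own content
        while elem.getprevious() is not None:
            del elem.getparent()[0]        # drop already-processed siblings
    del context


data = BytesIO(
    b"<Records>"
    b"<Record><Title>One</Title></Record>"
    b"<Record><Title>Two</Title></Record>"
    b"</Records>"
)

titles = []
fast_iter(
    etree.iterparse(data, events=("end",), tag="Record"),
    lambda elem: titles.append(elem.findtext("Title")),
)
print(titles)
```

Because processed elements are removed from the tree, memory use stays roughly flat, but anything the callback needs later has to be copied out before it returns.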

Don’t use deepcopy to simply replicate the text of a single node. It’s faster to create a new node, populate its text attribute manually, and then serialize it. In my tests, calling deepcopy for both <Title> and <Copyright> was 15 percent slower than the code in Listing 6. You’ll see the greatest performance boosts from deepcopy when serializing large descendant trees.

When benchmarked against cElementTree using the code in Listing 7, lxml’s serializer was almost twice as fast (50 seconds versus 95 seconds):

Listing 7. Serializing with cElementTree

# cElementTree was merged into xml.etree.ElementTree in Python 3.3
# and removed as a separate module in Python 3.9
import xml.etree.ElementTree as cet

def serialize_cet(elem):
    r = cet.Element('Record')

    # Create a new element with the same text child
    t = cet.SubElement(r, 'Title')
    t.text = elem.find('Title').text

    # ElementTree does not store parent references -- an element can
    # exist in multiple trees. It's not necessary to use deepcopy here.
    for c in elem.findall('Copyright'):
        r.append(c)
    return r

context = cet.iterparse('copyright.xml', events=('end','start'))
context = iter(context)
event, root = next(context)

# `out` is the binary output file opened in Listing 6
for event, elem in context:
    if elem.tag == 'Record' and event == 'end':
        result = serialize_cet(elem)
        out.write(cet.tostring(result, encoding='utf-8'))
        root.clear()

For more information about this iteration pattern, see "Incremental Parsing" in the ElementTree documentation.

Who uses lxml?

As an XML library, lxml is often used under the hood of in-house

server applications, such as web servers or applications that

facilitate some kind of content management. Many people who deploy

Zope, Plone or Django use it together with lxml in the background,

without speaking publicly about it. Therefore, it is hard to get an

idea of who uses it, and the following list of ‘users and projects we

know of’ is very far from a complete list of lxml’s users.

Also note that the compatibility to the ElementTree library does not

require projects to set a hard dependency on lxml — as long as they do

not take advantage of lxml’s enhanced feature set.

-

cssutils,

a CSS parser and toolkit, can be used with lxml.cssselect -

Deliverance,

a content theming tool -

Enfold Proxy 4,

a web server accelerator with on-the-fly XSLT processing -

Inteproxy,

a secure HTTP proxy -

lwebstring,

an XML template engine -

openpyxl,

a library to read/write MS Excel 2007 files -

OpenXMLlib,

a library for handling OpenXML document meta data -

PsychoPy,

psychology software in Python -

Pycoon,

a WSGI web development framework based on XML pipelines -

pycsw,

an OGC CSW server implementation written in Python -

PyQuery,

a query framework for XML/HTML, similar to jQuery for JavaScript -

python-docx,

a package for handling Microsoft’s Word OpenXML format -

Rambler,

a meta search engine that aggregates different data sources -

rdfadict,

an RDFa parser with a simple dictionary-like interface. -

xupdate-processor,

an XUpdate implementation for lxml.etree -

Diazo,

an XSLT-under-the-hood web site theming engine

Zope3 and some of its extensions have good support for lxml:

-

gocept.lxml,

Zope3 interface bindings for lxml -

z3c.rml,

an implementation of ReportLab’s RML format -

zif.sedna,

an XQuery based interface to the Sedna OpenSource XML database

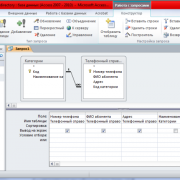

Finding elements quickly

After parsing, the most common XML task is to locate specific data of

interest inside the parsed tree. lxml offers several approaches, from a

simplified search syntax to full XPath 1.0. As a user, you should be aware

of the performance characteristics and optimization techniques for each

approach.

Avoid use of find and findall

The find and findall methods, inherited from the ElementTree API, locate one or more descendant nodes using a simplified XPath-like expression language called ElementPath. Users migrating from ElementTree to lxml can naturally continue to use the find/ElementPath syntax.

lxml supplies two other options for discovering subnodes: the iterchildren/iterdescendants methods and true XPath. In cases where the expression should match a node name, it is far faster (in some cases twice as fast) to use the iterchildren or iterdescendants methods with their optional tag parameter when compared to their equivalent ElementPath expressions.

For more complex patterns, use the XPath class to precompile search patterns. Simple patterns that mimic the behavior of iterchildren with tag arguments (for example, etree.XPath("child::Title")) execute in effectively the same time as their iterchildren equivalents. It’s important to precompile, though. Compiling the pattern in each execution of the loop or using the xpath() method on an element (described in the lxml documentation) can be almost twice as slow as compiling once and then using that pattern repeatedly.
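The three lookup styles can be compared side by side on a tiny, made-up document. This sketch only shows that they return the same nodes; it makes no timing claims.

```python
from lxml import etree

root = etree.XML(
    "<Records>"
    "<Record><Title>Midnight Rain</Title><Copyright/></Record>"
    "<Record><Title>Maeve Ascendant</Title></Record>"
    "</Records>"
)

# Compile once, outside the loop, and reuse the callable for every record.
find_title = etree.XPath("child::Title")

via_xpath = [find_title(rec)[0].text for rec in root]
via_iterchildren = [next(rec.iterchildren(tag="Title")).text for rec in root]
via_find = [rec.find("Title").text for rec in root]   # ElementPath

print(via_xpath)
```

Note that a compiled XPath object returns a list of matches, so the first hit is taken with `[0]`, while iterchildren returns an iterator and find returns a single element or None.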

XPath evaluation in lxml is fast. If only a subset of nodes needs to be serialized, it is much better to limit with precise XPath expressions up front than to inspect all the nodes later. For example, limiting the sample serialization to include only titles containing the word night, as in Listing 8, takes 60 percent of the time to serialize the full set.

Listing 8. Conditional serialization with XPath classes

from copy import deepcopy

from lxml import etree

def write_if_node(out, node):
    if node is not None:
        out.write(etree.tostring(node, encoding='utf-8'))

def serialize_with_xpath(elem, xp1, xp2):
    '''Take our source <Record> element and apply two pre-compiled XPath classes.
    Return a node only if the first expression matches.
    '''
    r = etree.Element('Record')
    t = etree.SubElement(r, 'Title')
    x = xp1(elem)
    if x:
        # XPath objects return a list of matches; use the first one
        t.text = x[0].text
        for c in xp2(elem):
            r.append(deepcopy(c))
        return r
    # no match: return None implicitly so that write_if_node() skips it

xp1 = etree.XPath("child::Title[contains(text(), 'night')]")
xp2 = etree.XPath("child::Copyright")

# tostring() with an encoding returns bytes, so open the file in binary mode
out = open('out.xml', 'wb')
context = etree.iterparse('copyright.xml', events=('end',), tag='Record')
fast_iter(context,
          lambda elem: write_if_node(out, serialize_with_xpath(elem, xp1, xp2)))

Finding nodes in other parts of the document

Note that, even when using iterparse, it is possible to use XPath predicates based on looking ahead of the current node. To find all <Title> nodes that are immediately followed by a record whose title contains the word night, do this:

etree.XPath("Title[contains(../Record/following::Record/Title/text(), 'night')]")

However, when using the memory-efficient iteration strategy described earlier, this command returns nothing because preceding nodes are cleared as parsing proceeds through the document:

etree.XPath("Title[contains(../Record/preceding::Record/Title/text(), 'night')]")

While it is possible to write an efficient algorithm to solve this

particular problem, tasks involving analyses across

nodes—especially those that might be randomly distributed in the

document—are usually more suited for an XML database that uses

XQuery, such as eXist.