Homemade lasagna: a classic recipe

Contents:

- Etymology

- How to Make Lasagna

- See also

- Sword Art Online: Alicization Lycoris won't launch. Startup error. Solution

- Files, drivers and libraries

- Sword Art Online: Alicization Lycoris reports a missing DLL file. Solution

- What to do first

- GPU support

- Run the MNIST example

- Where to go from here

- Lasagne

- A preface, or a lyrical digression on deep learning libraries

- Basics

- Prerequisites

- Quickly retrieve forgotten or lost passwords from the most popular web browsers, email clients and even your computer’s system with the help of this command-line tool

- Lasagne or lasagna?

Etymology

In Ancient Rome, there was a dish similar to a traditional lasagne called lasana or lasanum (Latin for ‘container’ or ‘pot’) described in the book De re coquinaria by Marcus Gavius Apicius, but the word could have a more ancient origin. The first theory is that lasagne comes from Greek λάγανον (laganon), a flat sheet of pasta dough cut into strips. The word λαγάνα (lagana) is still used in Greek to mean a flat thin type of unleavened bread baked for the holiday Clean Monday.

Another theory is that the word lasagne comes from the Greek λάσανα (lasana) or λάσανον (lasanon) meaning ‘trivet’, ‘stand for a pot’ or ‘chamber pot’. The Romans borrowed the word as lasanum, meaning ‘cooking pot’. The Italians used the word to refer to the cookware in which lasagne is made. Later, the food took on the name of the serving dish.

Another proposed link, or reference, is the 14th-century English dish loseyn as described in The Forme of Cury, a cookbook prepared by «the chief Master Cooks of King Richard II», which included English recipes as well as dishes influenced by Spanish, French, Italian, and Arab cuisines. This dish has similarities to modern lasagne in both its recipe, which features a layering of ingredients between pasta sheets, and its name. An important difference is the lack of tomatoes, which did not arrive in Europe until after Columbus reached America in 1492. The earliest discussion of the tomato in European literature appeared in a herbal written in 1544 by Pietro Andrea Mattioli, while the earliest cookbook found with tomato recipes was published in Naples in 1692, but the author had apparently obtained these recipes from Spanish sources.

As with most other types of pasta, the Italian word is a plural form: lasagne meaning more than one sheet of lasagna, though in many other languages a derivative of the singular word lasagna is used for the popular baked pasta dish. Regional usage in Italy, when referring to the baked dish, favours the plural form lasagne in the north of the country and the singular lasagna in the south. The former, plural usage has influenced the usual spelling found in British English, while the southern Italian, singular usage has influenced the spelling often used in American English.

How to Make Lasagna

For this recipe, we are essentially making a thick, meaty tomato sauce and layering that with noodles and cheese into a casserole. Here’s the run-down:

- Start by making the sauce with ground beef, bell peppers, onions, and a combo of tomato sauce, tomato paste, and crushed tomatoes. The three kinds of tomatoes give the sauce great depth of flavor.

- Let this simmer while you boil the noodles and get the cheeses ready. We’re using ricotta, shredded mozzarella, and parmesan — like the mix of tomatoes, this 3-cheese blend gives the lasagna great flavor!

- From there, it’s just an assembly job. A cup of meat sauce, a layer of noodles, more sauce, followed by a layer of cheese. Repeat until you have three layers and have used up all the ingredients.

- Bake until bubbly and you’re ready to eat!

See also

- Italy portal

- Food portal

- Baked ziti – a baked Italian dish with macaroni and sauce

- Casserole

- Crozets de Savoie – a type of small, square-shaped pasta made in the Savoie region in France

- King Ranch chicken – a casserole also known as «Texas Lasagna»

- – inadvertent corrosion caused by improper storage of lasagna

- Lasagnette – a narrower form of the pasta

- Lazanki – a type of small square- or rectangle-shaped pasta made in Poland and Belarus

- Moussaka – a Mediterranean casserole that is layered in some recipes

- Pastelón – a baked, layered Puerto Rican dish made with plantains

- Pastitsio – a baked, layered Mediterranean pasta dish

- Timballo – an Italian casserole

- List of Italian dishes

- List of casserole dishes

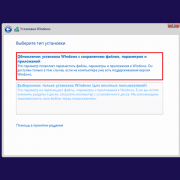

Sword Art Online: Alicization Lycoris won't launch. Startup error. Solution

SWORD ART ONLINE Alicization Lycoris installed, but it simply refuses to run. What should you do?

Does Sword Art Online: Alicization Lycoris show any error after it crashes? If so, what does the message say? Perhaps the game doesn't support your graphics card or some other hardware? Or maybe it doesn't have enough RAM?

Keep in mind that developers themselves have an interest in building an error-reporting system into their games that describes the failure when a crash happens. They need it to understand why their project fails to launch during testing.

Be sure to write down the text of the error. If you can't read the language it is written in, turn to the official forum of the SWORD ART ONLINE Alicization Lycoris developers. It is also worth checking large gaming communities and, of course, our FAQ.

If Sword Art Online: Alicization Lycoris won't launch, we recommend disabling your antivirus or adding the game to its exclusions, and checking once more that your PC meets the system requirements. If any part of your build falls short, upgrade it with more powerful components where possible.

Files, drivers and libraries

Almost every device in a computer needs its own set of dedicated software: drivers, libraries and other files that make the computer work correctly.

- Download the Nvidia GeForce graphics card driver

- Download the AMD Radeon graphics card driver

Driver Updater

- download Driver Updater and launch the program;

- scan the system (this usually takes no more than five minutes);

- update outdated drivers with a single click.

Advanced System Optimizer (to significantly increase FPS)

- download Advanced System Optimizer and launch the program;

- scan the system (this usually takes no more than five minutes);

- carry out all the recommended actions. Your system will run like new!

Once the drivers are done, you can move on to installing up-to-date libraries, DirectX and .NET Framework. In one way or another, they are used by almost every modern game:

- Download DirectX

- Download Microsoft .NET Framework 3.5

- Download Microsoft .NET Framework 4

- Download Microsoft Visual C++ 2005 Service Pack 1

- Download Microsoft Visual C++ 2008 (32-bit) (Download Service Pack 1)

- Download Microsoft Visual C++ 2008 (64-bit) (Download Service Pack 1)

- Download Microsoft Visual C++ 2010 (32-bit) (Download Service Pack 1)

- Download Microsoft Visual C++ 2010 (64-bit) (Download Service Pack 1)

- Download Microsoft Visual C++ 2012 Update 4

- Download Microsoft Visual C++ 2013

Sword Art Online: Alicization Lycoris reports a missing DLL file. Solution

As a rule, problems caused by missing DLL libraries appear when Sword Art Online: Alicization Lycoris starts, but sometimes the game may load certain DLLs while it is running and, failing to find them, crash quite unceremoniously.

To fix this error, you need to find the required DLL and install it on your system. The easiest way to do this is with DLL-fixer, a program that scans the system and helps you quickly find missing libraries.

If your problem turned out to be more specific, or the method described in this article didn't help, you can ask other users in our «Questions and Answers» section. They will be quick to help you!

Thank you for your attention!

What to do first

- Download and run the world-famous CCleaner, a program that cleans unnecessary junk off your computer so that the system runs faster after the very first reboot;

- Update all drivers in the system with Driver Updater: it scans your computer and updates all drivers to the latest version in 5 minutes;

- Install Advanced System Optimizer and enable its game mode, which shuts down useless background processes when games launch and improves in-game performance.

GPU support

Thanks to Theano, Lasagne transparently supports training your networks on a GPU, which may be 10 to 50 times faster than training them on a CPU. Currently, this requires an NVIDIA GPU with CUDA support, and some additional software for Theano to use it.

CUDA

Install the latest CUDA Toolkit and possibly the corresponding driver available from NVIDIA: https://developer.nvidia.com/cuda-downloads

Closely follow the Getting Started Guide linked underneath the download table to be sure you don't mess up your system by installing conflicting drivers.

After installation, make sure /usr/local/cuda/bin is in your PATH, so nvcc --version works. Also make sure /usr/local/cuda/lib64 is in your LD_LIBRARY_PATH, so the toolkit libraries can be found.
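The two environment checks above can be scripted. This is a minimal stdlib sketch, assuming the default /usr/local/cuda locations named in the text (your install may differ); the helper name `missing_cuda_paths` is hypothetical.

```python
import os

# Default CUDA locations from the text above; adjust if CUDA lives elsewhere.
CUDA_BIN = "/usr/local/cuda/bin"
CUDA_LIB = "/usr/local/cuda/lib64"


def missing_cuda_paths(env=None):
    """Return (variable, directory) pairs that are not configured yet.

    `env` defaults to the current process environment; pass a dict to test.
    """
    env = os.environ if env is None else env
    required = [("PATH", CUDA_BIN), ("LD_LIBRARY_PATH", CUDA_LIB)]
    missing = []
    for var, directory in required:
        # Both variables hold directories separated by os.pathsep (":" on Linux);
        # trailing slashes are ignored when comparing.
        entries = [p.rstrip("/") for p in env.get(var, "").split(os.pathsep) if p]
        if directory not in entries:
            missing.append((var, directory))
    return missing


if __name__ == "__main__":
    for var, directory in missing_cuda_paths():
        print(f"{directory} is not in {var}")
```

An empty result means both directories are listed; it does not prove that nvcc or the libraries are actually present there.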

Theano

If CUDA is set up correctly, the following should print some information on your GPU (the first CUDA-capable GPU in your system if you have multiple ones):

    THEANO_FLAGS=device=gpu python -c "import theano; print(theano.sandbox.cuda.device_properties(0))"

To configure Theano to use the GPU by default, create a file .theanorc directly in your home directory, with the following contents:

    [global]
    floatX = float32
    device = gpu

Optionally add allow_gc = False for some extra performance at the expense of (sometimes substantially) higher GPU memory usage.
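If you prefer to generate the file, a stdlib sketch with configparser can produce the same settings. It assumes the INI-style [global] section that .theanorc uses; the helper name `theanorc_text` is hypothetical, and it only returns text so that nothing in your real home directory is overwritten.

```python
import configparser
import io


def theanorc_text(allow_gc=None):
    """Build .theanorc contents with the GPU settings described above."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep "floatX" capitalized instead of lowercasing it
    config["global"] = {"floatX": "float32", "device": "gpu"}
    if allow_gc is not None:
        # Optional: allow_gc = False trades extra GPU memory for speed.
        config["global"]["allow_gc"] = "True" if allow_gc else "False"
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(theanorc_text(allow_gc=False))
```

To install it, you could write the returned string to a .theanorc file in your home directory after checking that you are not replacing an existing configuration.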

If you run into problems, please check Theano’s instructions for Using the GPU.

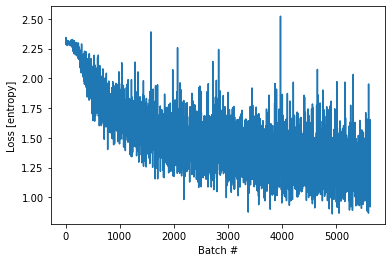

Run the MNIST example

In this first part of the tutorial, we will just run the MNIST example that's included in the source distribution of Lasagne.

We assume that you have already run through the installation instructions. If you haven't done so already, get a copy of the source tree of Lasagne, and navigate to the folder in a terminal window. Enter the examples folder and run the mnist.py example script:

    cd examples
    python mnist.py

If everything is set up correctly, you will get an output like the following:

    Using gpu device 0: GeForce GT 640
    Loading data...
    Downloading train-images-idx3-ubyte.gz
    Downloading train-labels-idx1-ubyte.gz
    Downloading t10k-images-idx3-ubyte.gz
    Downloading t10k-labels-idx1-ubyte.gz
    Building model and compiling functions...
    Starting training...
    Epoch 1 of 500 took 1.858s
      training loss:        1.233348
      validation loss:      0.405868
      validation accuracy:  88.78 %
    Epoch 2 of 500 took 1.845s
      training loss:        0.571644
      validation loss:      0.310221
      validation accuracy:  91.24 %
    Epoch 3 of 500 took 1.845s
      training loss:        0.471582
      validation loss:      0.265931
      validation accuracy:  92.35 %
    Epoch 4 of 500 took 1.847s
      training loss:        0.412204
      validation loss:      0.238558
      validation accuracy:  93.05 %
    ...

Where to go from here

This finishes our introductory tutorial. For more information on what you can do with Lasagne's layers, just continue reading through Layers and Creating custom layers.

More tutorials, examples and code snippets can be found in the Lasagne Recipes repository.

Finally, the reference lists and explains all layers, weight initializers, nonlinearities, loss expressions, training methods and regularizers included in the library, and should also make it simple to create your own.


Lasagne

Lasagne is a lightweight library to build and train neural networks in Theano.

Its main features are:

- Supports feed-forward networks such as Convolutional Neural Networks (CNNs), recurrent networks including Long Short-Term Memory (LSTM), and any combination thereof

- Allows architectures of multiple inputs and multiple outputs, including auxiliary classifiers

- Many optimization methods including Nesterov momentum, RMSprop and ADAM

- Freely definable cost function and no need to derive gradients due to Theano's symbolic differentiation

- Transparent support of CPUs and GPUs due to Theano's expression compiler

Its design is governed by six principles:

- Simplicity: Be easy to use, easy to understand and easy to extend, to facilitate use in research

- Transparency: Do not hide Theano behind abstractions, directly process and return Theano expressions or Python / numpy data types

- Modularity: Allow all parts (layers, regularizers, optimizers, …) to be used independently of Lasagne

- Pragmatism: Make common use cases easy, do not overrate uncommon cases

- Restraint: Do not obstruct users with features they decide not to use

- Focus: «Do one thing and do it well»
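Nesterov momentum, one of the optimization methods listed above, can be illustrated in plain Python. This sketch assumes the formulation documented for `lasagne.updates.nesterov_momentum`, where the gradient is evaluated at the current parameters: velocity := momentum * velocity - learning_rate * gradient, then param := param + momentum * velocity - learning_rate * gradient. The scalar parameter and the quadratic objective are illustrative only.

```python
def nesterov_step(param, velocity, grad, learning_rate=0.01, momentum=0.9):
    """One Nesterov-momentum step for a single scalar parameter.

    The gradient is taken at the current parameter value; the look-ahead
    is folded into the parameter update instead.
    """
    velocity = momentum * velocity - learning_rate * grad
    param = param + momentum * velocity - learning_rate * grad
    return param, velocity


def minimize_quadratic(x0=5.0, steps=500):
    # Illustrative objective f(x) = x**2, whose gradient is 2 * x.
    x, v = x0, 0.0
    for _ in range(steps):
        x, v = nesterov_step(x, v, grad=2 * x)
    return x


if __name__ == "__main__":
    print(minimize_quadratic())  # a value very close to 0
```

The default learning rate and momentum match the values passed to `nesterov_momentum` in the example below; in Lasagne the same rule is applied elementwise to every trainable parameter array.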

Installation

In short, you can install a known compatible version of Theano and the latest Lasagne development version via:

    pip install -r https://raw.githubusercontent.com/Lasagne/Lasagne/master/requirements.txt
    pip install https://github.com/Lasagne/Lasagne/archive/master.zip

Example

    import lasagne
    import theano
    import theano.tensor as T

    # create Theano variables for input and target minibatch
    input_var = T.tensor4('X')
    target_var = T.ivector('y')

    # create a small convolutional neural network
    from lasagne.nonlinearities import leaky_rectify, softmax
    network = lasagne.layers.InputLayer((None, 3, 32, 32), input_var)
    network = lasagne.layers.Conv2DLayer(network, 64, (3, 3),
                                         nonlinearity=leaky_rectify)
    network = lasagne.layers.Conv2DLayer(network, 32, (3, 3),
                                         nonlinearity=leaky_rectify)
    network = lasagne.layers.Pool2DLayer(network, (3, 3), stride=2, mode='max')
    network = lasagne.layers.DenseLayer(lasagne.layers.dropout(network, 0.5),
                                        128, nonlinearity=leaky_rectify,
                                        W=lasagne.init.Orthogonal())
    network = lasagne.layers.DenseLayer(lasagne.layers.dropout(network, 0.5),
                                        10, nonlinearity=softmax)

    # create loss function: mean cross-entropy plus a small L2 weight penalty
    prediction = lasagne.layers.get_output(network)
    loss = lasagne.objectives.categorical_crossentropy(prediction, target_var)
    loss = loss.mean() + 1e-4 * lasagne.regularization.regularize_network_params(
            network, lasagne.regularization.l2)

    # create parameter update expressions
    params = lasagne.layers.get_all_params(network, trainable=True)
    updates = lasagne.updates.nesterov_momentum(loss, params, learning_rate=0.01,
                                                momentum=0.9)

    # compile training function that updates parameters and returns training loss
    train_fn = theano.function([input_var, target_var], loss, updates=updates)

    # train network (assuming you've got some training data in numpy arrays:
    # training_data is a list of (input_batch, target_batch) pairs, not defined here)
    for epoch in range(100):
        loss = 0
        for input_batch, target_batch in training_data:
            loss += train_fn(input_batch, target_batch)
        print("Epoch %d: Loss %g" % (epoch + 1, loss / len(training_data)))

    # use trained network for predictions (deterministic=True disables dropout;
    # test_data is an array of test inputs, not defined here)
    test_prediction = lasagne.layers.get_output(network, deterministic=True)
    predict_fn = theano.function([input_var], T.argmax(test_prediction, axis=1))
    print("Predicted class for first test input: %r" % predict_fn(test_data[0]))
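To see what the example network actually computes, the following stdlib sketch traces tensor shapes and parameter counts layer by layer. It assumes Lasagne's defaults for the layers as used above: Conv2DLayer with stride 1 and no padding, and Pool2DLayer with ignore_border=True. The helper names are hypothetical; counts include biases.

```python
def conv_out(size, filter_size, stride=1, pad=0):
    # 'valid' convolution: floor((size + 2*pad - filter) / stride) + 1
    return (size + 2 * pad - filter_size) // stride + 1


def pool_out(size, pool_size, stride):
    # Max pooling with ignore_border=True drops incomplete windows at the edge.
    return (size - pool_size) // stride + 1


def trace_example_network():
    channels, h, w = 3, 32, 32              # InputLayer((None, 3, 32, 32))
    n_params = 0
    shapes = [(channels, h, w)]
    for num_filters in (64, 32):            # two Conv2DLayer layers, 3x3 filters
        n_params += num_filters * channels * 3 * 3 + num_filters  # W + b
        channels, h, w = num_filters, conv_out(h, 3), conv_out(w, 3)
        shapes.append((channels, h, w))
    h, w = pool_out(h, 3, 2), pool_out(w, 3, 2)  # Pool2DLayer((3, 3), stride=2)
    shapes.append((channels, h, w))
    flat = channels * h * w                 # DenseLayer flattens its input
    for units in (128, 10):                 # dropout layers add no parameters
        n_params += flat * units + units    # W + b
        shapes.append((units,))
        flat = units
    return shapes, n_params


if __name__ == "__main__":
    shapes, n_params = trace_example_network()
    for shape in shapes:
        print(shape)
    print("trainable parameters:", n_params)
```

Most of the parameters sit in the first DenseLayer (5408 inputs times 128 units), which is typical for small convolutional networks that flatten straight into a dense layer.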

A preface, or a lyrical digression on deep learning libraries

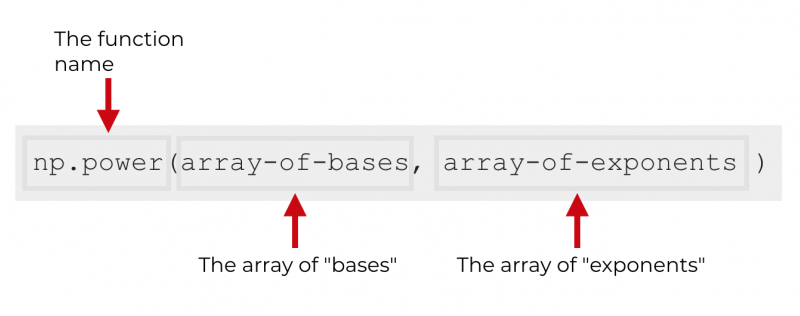

Dozens of libraries for working with neural networks exist today. They all differ in implementation, sometimes substantially, but two main approaches can be identified: imperative and symbolic.

Let's see how they differ with an example. Suppose we want to compute a simple expression.

Written imperatively in Python, the interpreter executes the code line by line, storing each intermediate result in a variable.

The same program in the symbolic paradigm differs in one essential way: when we declare the expression, nothing is executed. We have only defined a computation graph, which we then compile and finally execute.
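A minimal pure-Python sketch of the two styles, using a hypothetical expression d = a * b + 1. The `Node` class and `compile_graph` helper are illustrative and not part of any library; a real symbolic framework such as Theano would also optimize the graph at the compile step, which this sketch does not do.

```python
import operator

# Imperative style: each statement runs immediately and stores a concrete value.
a = 2.0
b = 3.0
c = a * b
d = c + 1
print(d)  # 7.0


class Node:
    """A node of a computation graph: an operation applied to input nodes."""

    def __init__(self, op=None, *inputs, name=None):
        self.op, self.inputs, self.name = op, inputs, name

    def __mul__(self, other):
        return Node(operator.mul, self, as_node(other))

    def __add__(self, other):
        return Node(operator.add, self, as_node(other))


def as_node(value):
    # Plain numbers become constant leaves whose op simply returns the number.
    return value if isinstance(value, Node) else Node(lambda: value)


def compile_graph(inputs, output):
    """Turn a graph into a Python function of the input values."""
    def run(*values):
        cache = dict(zip(inputs, values))  # input nodes -> supplied values

        def evaluate(node):
            if node not in cache:
                cache[node] = node.op(*(evaluate(i) for i in node.inputs))
            return cache[node]

        return evaluate(output)
    return run


# Symbolic style: these lines only build a graph; nothing is computed yet.
A = Node(name="A")
B = Node(name="B")
D = A * B + 1
f = compile_graph([A, B], D)   # "compile" step
print(f(2.0, 3.0))             # execute step: 7.0
```

The three symbolic stages (declare, compile, execute) are separate calls, which is what gives a framework the chance to analyze the whole graph before running it.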

Both approaches have their advantages and drawbacks. First of all, imperative programs are more flexible, more transparent and easier to debug. We can use the full power of the programming language, such as loops and branches, and print intermediate results for debugging. This flexibility comes mainly from the few constraints placed on the interpreter, which in turn must be ready for any later use of the variables.

The symbolic paradigm, on the other hand, imposes more constraints, but the resulting computations are more efficient in both memory and speed: at compile time, a number of optimizations can be applied, such as detecting unused variables, precomputing part of the computation, reusing memory, and so on. The distinguishing feature of symbolic programs is the separation into graph declaration, compilation and execution stages.

We dwell on this in such detail because the imperative paradigm is familiar to most programmers, while the symbolic one may seem unusual, and Theano is a clear example of a symbolic framework.

If you want to explore this topic further, I recommend the corresponding section of the MXNet documentation (we will write a separate post about this library). The key point for understanding the rest of this text, however, is that when programming in Theano, we write a Python program that we then compile and execute.

But enough theory; let's get to grips with Theano through examples.

Basics

First steps

Now that everything is installed and configured, let's write some code: for example, let's compute the value of a polynomial at the point 10.

Here we did four things: defined a scalar variable of a specific type, built an expression containing our polynomial, defined and compiled a function, and executed it, passing the number 10 as input.

Note that variables in Theano are typed, and a variable's type carries information about both the data type and the number of dimensions. So, to evaluate our polynomial at several points at once, we need to define the variable as a vector.

In this case we only need to specify the number of dimensions when creating the variable: the size of each dimension is determined automatically when the function is called.
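A pure-Python sketch of this typing rule, assuming a hypothetical polynomial x**2 + 2*x + 1. `TypedVar` and `compile_fn` are illustrative stand-ins, not Theano's API: the variable fixes only the number of dimensions, the compiled function rejects inputs with a different number of dimensions (Theano raises an exception in that case as well), and the vector length comes from the argument at call time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TypedVar:
    name: str
    ndim: int  # 0 = scalar, 1 = vector; the size of each dimension is not fixed


def compile_fn(var, expr):
    """Return a callable that checks the input's ndim, then evaluates expr."""
    def fn(value):
        actual = 0 if isinstance(value, (int, float)) else 1
        if actual != var.ndim:
            raise TypeError(f"{var.name}: expected ndim={var.ndim}, got ndim={actual}")
        if var.ndim == 0:
            return expr(value)
        return [expr(v) for v in value]  # length taken from the argument
    return fn


def poly(x):
    return x ** 2 + 2 * x + 1  # hypothetical polynomial


f = compile_fn(TypedVar("x", 0), poly)
print(f(10))           # 121

g = compile_fn(TypedVar("x", 1), poly)
print(g([1, 2, 10]))   # [4, 9, 121]
```

Calling `f` with a list, or `g` with a single number, raises `TypeError`, mirroring the strict typing described above.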

UPD: It is no secret that linear algebra is used everywhere in machine learning: examples are described by feature vectors, model parameters are written as matrices, and images are represented as 3-dimensional tensors. Scalars, vectors and matrices can be viewed as special cases of tensors, so from now on that is what we will call these linear-algebra objects. By a tensor we mean an n-dimensional array of numbers.

The package contains the most commonly used , but it is not difficult .

If the types do not match, Theano throws an exception. This, like many other aspects of how functions behave, can be changed by passing an extra argument to the function constructor.

We can also compute several expressions at once. In that case the optimizer can reuse their overlapping parts, here a sum that both expressions share.
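A pure-Python sketch of that reuse, assuming a hypothetical pair of outputs that share the subexpression a + b. Structurally identical nodes are looked up in a table, so the shared sum is built, and computed, only once. The `Graph` class is illustrative; Theano's optimizer performs this kind of merging (and much more) on its own graphs.

```python
import operator


class Graph:
    """Builds expression nodes and merges structurally identical ones."""

    def __init__(self):
        self._index = {}   # (op, input ids...) -> node id
        self.ops = []      # node id -> ("input", name) or (op, input ids...)

    def _intern(self, key):
        if key not in self._index:
            self._index[key] = len(self.ops)
            self.ops.append(key)
        return self._index[key]

    def input(self, name):
        return self._intern(("input", name))

    def add(self, x, y):
        return self._intern((operator.add, x, y))

    def mul(self, x, y):
        return self._intern((operator.mul, x, y))

    def run(self, outputs, values):
        """Evaluate every node once, in creation order, and return the outputs."""
        results = []
        for op, *args in self.ops:
            if op == "input":
                results.append(values[args[0]])
            else:
                results.append(op(*(results[i] for i in args)))
        return [results[o] for o in outputs]


g = Graph()
a, b = g.input("a"), g.input("b")
s = g.add(a, b)
out1 = g.mul(s, s)              # (a + b) * (a + b)
out2 = g.add(g.add(a, b), a)    # (a + b) + a, reusing the same sum node
print(len(g.ops))               # 5 nodes: a, b, a+b, (a+b)*(a+b), (a+b)+a
print(g.run([out1, out2], {"a": 2, "b": 3}))  # [25, 7]
```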

Special shared variables are used to share state between functions.

Unlike tensor variables, the values of such variables can be read and modified outside Theano functions, from ordinary Python code.

Values stored in shared variables can also be «substituted» for tensor variables.
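A pure-Python sketch of these three behaviors. `SharedVariable` is hypothetical, with `get_value`/`set_value` methods named after the ones Theano's shared variables provide; `make_function` is a hypothetical helper whose `updates` (applied after each call) and `givens` (a shared value fed in place of an input) loosely mimic the arguments of the same names in `theano.function`. It imitates the behavior, not Theano's implementation.

```python
class SharedVariable:
    """State that lives outside any single function call."""

    def __init__(self, value):
        self._value = value

    def get_value(self):
        return self._value

    def set_value(self, value):
        self._value = value


def make_function(fn, updates=(), givens=None):
    """Wrap fn(**inputs) with post-call updates and shared-value substitution.

    updates: (shared, rule) pairs, where rule(old_value, **inputs) -> new value.
    givens: dict mapping an input name to the SharedVariable that supplies it.
    """
    givens = givens or {}

    def call(**inputs):
        for name, shared in givens.items():
            inputs[name] = shared.get_value()
        result = fn(**inputs)
        # Compute every new value first, so all rules see the old state.
        new_values = [(s, rule(s.get_value(), **inputs)) for s, rule in updates]
        for shared, value in new_values:
            shared.set_value(value)
        return result

    return call


state = SharedVariable(0)
# An accumulator: returns the current state, then adds `inc` to it.
accumulate = make_function(lambda inc: state.get_value(),
                           updates=[(state, lambda old, inc: old + inc)])
accumulate(inc=1)
accumulate(inc=10)
print(state.get_value())  # 11
state.set_value(-1)       # modified from ordinary Python code
accumulate(inc=3)
print(state.get_value())  # 2

# Substitution: feed the shared value in place of the input "x".
double = make_function(lambda x: 2 * x, givens={"x": state})
print(double())           # 4
```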

Prerequisites

Python + pip

Lasagne currently requires Python 2.7 or 3.4 to run. Please install Python via the package manager of your operating system if it is not included already.

Python includes pip for installing additional modules that are not shipped with your operating system, or shipped in an old version, and we will make use of it below. We recommend installing these modules into your home directory via --user, or into a virtual environment via virtualenv.
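A small stdlib sketch for checking both points before installing anything. It assumes that "3.4" means 3.4 or any later 3.x release, which the text does not state explicitly; the function names are hypothetical.

```python
import sys


def python_supported(version_info=None):
    """True for Python 2.7, or 3.4 and (assumed) later 3.x releases."""
    major, minor = (version_info or sys.version_info)[:2]
    return (major, minor) == (2, 7) or (major == 3 and minor >= 4)


def in_virtualenv():
    # venv changes sys.prefix away from sys.base_prefix; older virtualenv
    # releases instead set sys.real_prefix.
    return hasattr(sys, "real_prefix") or sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("Python version supported:", python_supported())
    print("Running inside a virtual environment:", in_virtualenv())
```

If `in_virtualenv()` is false, the text's advice is to install with pip's --user option instead of system-wide.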

C compiler

Theano requires a working C compiler, and numpy/scipy require a compiler as well if you install them via pip. On Linux, the default compiler is usually gcc, and on Mac OS, it's clang. Again, please install them via the package manager of your operating system.
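A quick way to see whether a compiler is reachable is to search PATH. This stdlib sketch uses `shutil.which`; the candidate names are assumptions (the text mentions gcc and clang, and cc is a common generic name), and finding a binary does not prove that Theano will accept it.

```python
import shutil


def find_c_compiler(candidates=("gcc", "clang", "cc")):
    """Return the path of the first candidate compiler found on PATH, or None."""
    for name in candidates:
        path = shutil.which(name)
        if path:
            return path
    return None


if __name__ == "__main__":
    compiler = find_c_compiler()
    print(compiler or "no C compiler found on PATH")
```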

numpy/scipy + BLAS

Lasagne requires numpy 1.6.2 or above, and Theano also requires scipy 0.11 or above. Numpy/scipy rely on a BLAS library to provide fast linear algebra routines. They will work fine without one, but a lot slower, so it is worth getting this right (but this is less important if you plan to use a GPU).

If you install numpy and scipy via your operating system's package manager, they should link to the BLAS library installed in your system. If you install numpy and scipy via pip install numpy and pip install scipy, make sure to have development headers for your BLAS library installed (e.g., the libopenblas-dev package on Debian/Ubuntu) while running the installation command. Please refer to the numpy/scipy build instructions if in doubt.
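The minimum versions above can be checked with a stdlib sketch. `version_tuple` is a hypothetical helper that keeps only the leading numeric parts of a version string (so "0.11.0rc1" compares as 0.11.0), which is a simplification of real version ordering.

```python
import importlib

MINIMUM = {"numpy": (1, 6, 2), "scipy": (0, 11)}


def version_tuple(version):
    """Turn '1.6.2' or '0.11.0rc1' into a tuple of ints, ignoring suffixes."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(package, version):
    return version_tuple(version) >= MINIMUM[package]


if __name__ == "__main__":
    for name in MINIMUM:
        try:
            module = importlib.import_module(name)
        except ImportError:
            print(name, "is not installed")
            continue
        status = "OK" if meets_minimum(name, module.__version__) else "too old"
        print(name, module.__version__, status)
```

To see which BLAS library an installed numpy was built against, `numpy.show_config()` prints its build configuration.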

Quickly retrieve forgotten or lost passwords from the most popular web browsers, email clients and even your computer’s system with the help of this command-line tool

What’s new in LaZagne 2.4:

- Big code review and lots of bugs fixed

- PEP8 style

- Pycrypto dependency removed

- Added pypykatz module


Let us say that you have forgotten an important password and you stubbornly decide not to go through the whole ‘Forgot Password’ process.

Light password retrieval command-line tool

If this is your case, then you can give LaZagne a try and hope that it may work for whatever password you are trying to retrieve.

It is recommended that you run it with full system rights

To start using this program, just unzip its archive, open a Command Prompt window on your computer and launch it from there.

This is probably the best time to point out that you should run the application with full administrative rights, especially if you are hoping to retrieve Wi-Fi and Windows passwords.

Once you have launched the program, it automatically displays a series of useful modules that you can use in order to get the best out of it.

Straightforward functionality

That said, you can choose to run individual modules just for chats, mails, git, svn, databases, wifi, sysadmin, browsers or even games, or you can run the versatile ‘All’ module and afterwards search manually for the results that interest you most.

The results should be displayed within a few seconds at most. The app lists the usernames, passwords and target names of the apps or services for which it found passwords.

In addition, you can also configure the program to run specific scripts and even write all the found passwords to a text or JSON file.

Inconclusive results

Our tests produced decent but somewhat inconclusive results: LaZagne was indeed able to find a series of credentials quite quickly.

Despite this, we would not recommend relying fully on this tool to retrieve forgotten passwords, as it was unable to find our Windows account password and other important credentials of that kind.

Granted, we tested the application on Windows 10, which is evidently the most secure version of Windows to date, so there is a good chance of better results on older versions of Windows.

All in all, LaZagne is a useful and lightweight utility that may be used to quickly retrieve passwords, but you should not expect miracles, since the app clearly needs some extra development work before it can be called dependable. Until then, it's just hit and miss, we are afraid.

Lasagne or lasagna?

In Italian, lasagne is the name given to those flat rectangular sheets of pasta most non-Italians call lasagna. But actually, lasagna is the singular of lasagne. Most pasta names in Italian are used in the plural form because recipes usually involve more than one piece of pasta! This is why I often find myself using plural verb forms when I write or talk about a particular pasta. After 14 years in Italy, it seems to come more naturally to say ‘spaghetti are..’ instead of ‘spaghetti is…’! If you’ve read some of my other blog posts you may have noticed this habit! In general, when talking about the pasta, Italians use the word ‘lasagne’, but for recipes they sometimes use ‘lasagna al forno’ too.

A little lasagne history!

Lasagne are probably one of the oldest forms of pasta. The ancient Romans ate a dish known as ‘lasana’ or ‘lasanum’ which is believed to have been similar to today’s lasagne al forno (baked lasagna). This was a thin sheet of dough made from wheat flour, which was baked in the oven or directly on the fire. Some food historians believe this pasta to be even older, claiming that the word originally comes from the Ancient Greek word laganon and was ‘borrowed’ by the Romans. In both cases, the original words referred to a cooking pot and eventually the dish was named after the ‘pot’ it was prepared in.

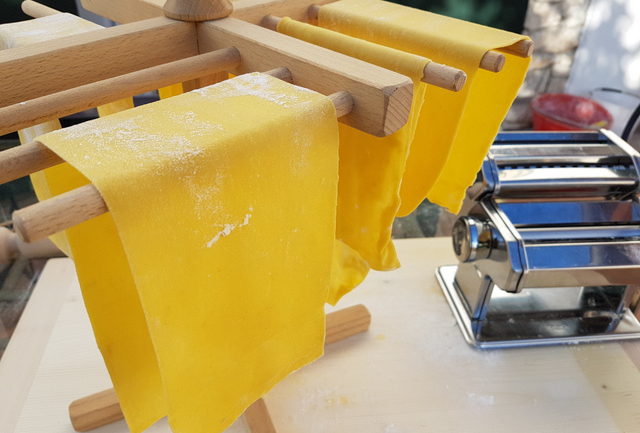

Homemade pasta sheets

In the Middle Ages, baked lasagne became so widespread that many Italian poets and writers mentioned it in their writings. Many of the recipes from the Middle Ages onwards describe a dish closer to the one we know today, with layers of pasta sheets cooked with meat and/or cheese between them. However, it wasn’t until tomatoes started to be used in Italian cooking around 1800 that lasagne al forno started to look more like the dish many of us call ‘lasagna’.

Red or white?

Having said that, not all Italian recipes for ‘lasagna al forno’ contain tomatoes. There are many ‘white’ recipes too. Here in Northern Italy, these dishes (red and white) are also called ‘pasticcio’. However, although pasticcio has layers of pasta with various sauces baked in the oven, it isn’t always made with lasagne pasta.

Different Italian baked lasagne recipes.

Today, lasagne al forno is a classic dish in many Italian regions and one of only two ways Italians prepare this pasta. The other is to fill and roll the pasta sheets to make fresh cannelloni or manicotti. Baked lasagne recipes vary from region to region. In Emilia-Romagna and most of Northern Italy, it is made with fresh or dried egg pasta and a classic bolognese sauce, Parmigiano-Reggiano cheese and bechamel sauce. In Emilia-Romagna they also often use green lasagne. Neapolitan lasagna, a typical carnival dish in Campania, is instead prepared with Neapolitan ragu, meatballs, cow’s ricotta, provola and pecorino cheese. The pasta sheets used in the south are usually dried and sometimes made without egg.

Baked Lasagna alla Norma

In some mountain areas, the ragu or meat sauce is often replaced by mushrooms. In Liguria, they sometimes use pesto instead of ragu and in Veneto, red radicchio from Treviso. In Umbria and in Marche there is a particular version, called ‘vincisgrassi’, in which the ragu is enriched with chicken or pork offal. In the Apennines, the ragu is replaced by a filling of porcini, truffles and pecorino. In Sicily, there is also the ‘alla Norma’ version with eggplants, or versions with boiled eggs added to the dish.

Lasagne al forno mushrooms and burrata

Baked lasagne recipes on the blog.

I’m sure my list of different lasagne al forno recipes is not complete and there are other typical versions in various regions. However, suffice it to say that this pasta is popular throughout the Italian peninsula and certainly the best-known version, outside of Italy, is the one from Emilia-Romagna. If you like lasagna al forno, I am certain you will enjoy trying out some of the lesser known recipes I have included here on the Pasta Project. One of my favourites is a ‘white’ lasagna al forno from Puglia which is made with mushrooms and burrata. Believe me when I say it’s amazingly delicious and since there’s no meat included, perfect for vegetarians too!

Here in Italy, lasagne sheets are often homemade or bought fresh as many supermarkets stock fresh sheets. This pasta is quite easy to make at home. If you wish to give it a try, do have a look at my recipe for homemade lasagne. I’m sure you’ll enjoy making it yourself and nothing quite beats the satisfaction of making your own pasta!
